Horizon on VMware Cloud on AWS Architecture

This chapter is one of a series that make up the VMware Workspace ONE and VMware Horizon Reference Architecture, a framework that provides guidance on the architecture, design considerations, and deployment of VMware Workspace ONE® and VMware Horizon® solutions. This chapter provides information about architecting VMware Horizon on VMware Cloud on Amazon Web Services (AWS). A companion chapter, Horizon on VMware Cloud on AWS Configuration, provides information about deployment and configuration of VMware Horizon on VMware Cloud on AWS.

Introduction

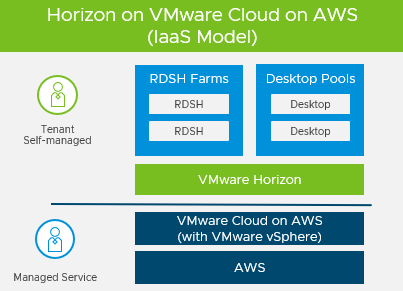

VMware Horizon on VMware Cloud on Amazon Web Services (AWS) delivers an integrated hybrid cloud for virtual desktops and applications. VMware Cloud on AWS is based on VMware Cloud Foundation and provides a fully supported, customizable cloud environment for VMware deployments and migrations. The solution delivers a full-stack software-defined data center (SDDC), including VMware vCenter, vSphere ESXi, NSX, and vSAN, delivered as a service on AWS.

Combined, VMware Cloud on AWS and VMware Horizon provide a simple, secure, and scalable solution that can address use cases such as on-demand capacity, disaster recovery, and cloud co-location without buying additional data center resources.

For customers who are already familiar with Horizon or have Horizon deployed on-premises, deploying Horizon on VMware Cloud on AWS lets you leverage a common architecture and familiar tools. This means that you use what you know from VMware vSphere and Horizon for operational consistency and leverage the same rich feature set and flexibility you expect. By outsourcing the management of the vSphere platform to VMware, you can simplify management of Horizon deployments. For more information about VMware Horizon for VMware Cloud on AWS, visit the Horizon on VMware Cloud on AWS product page.

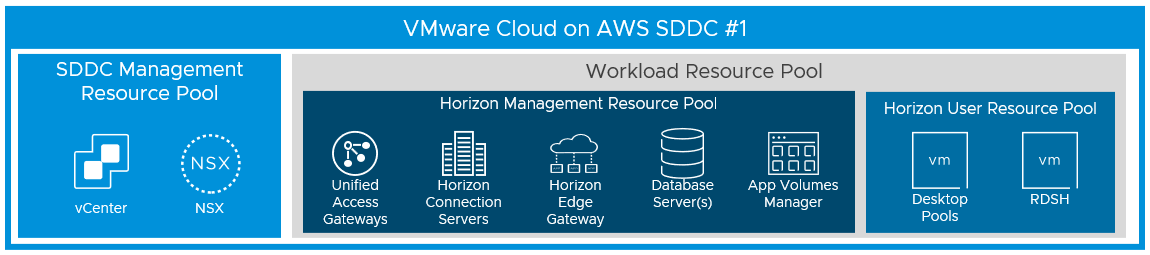

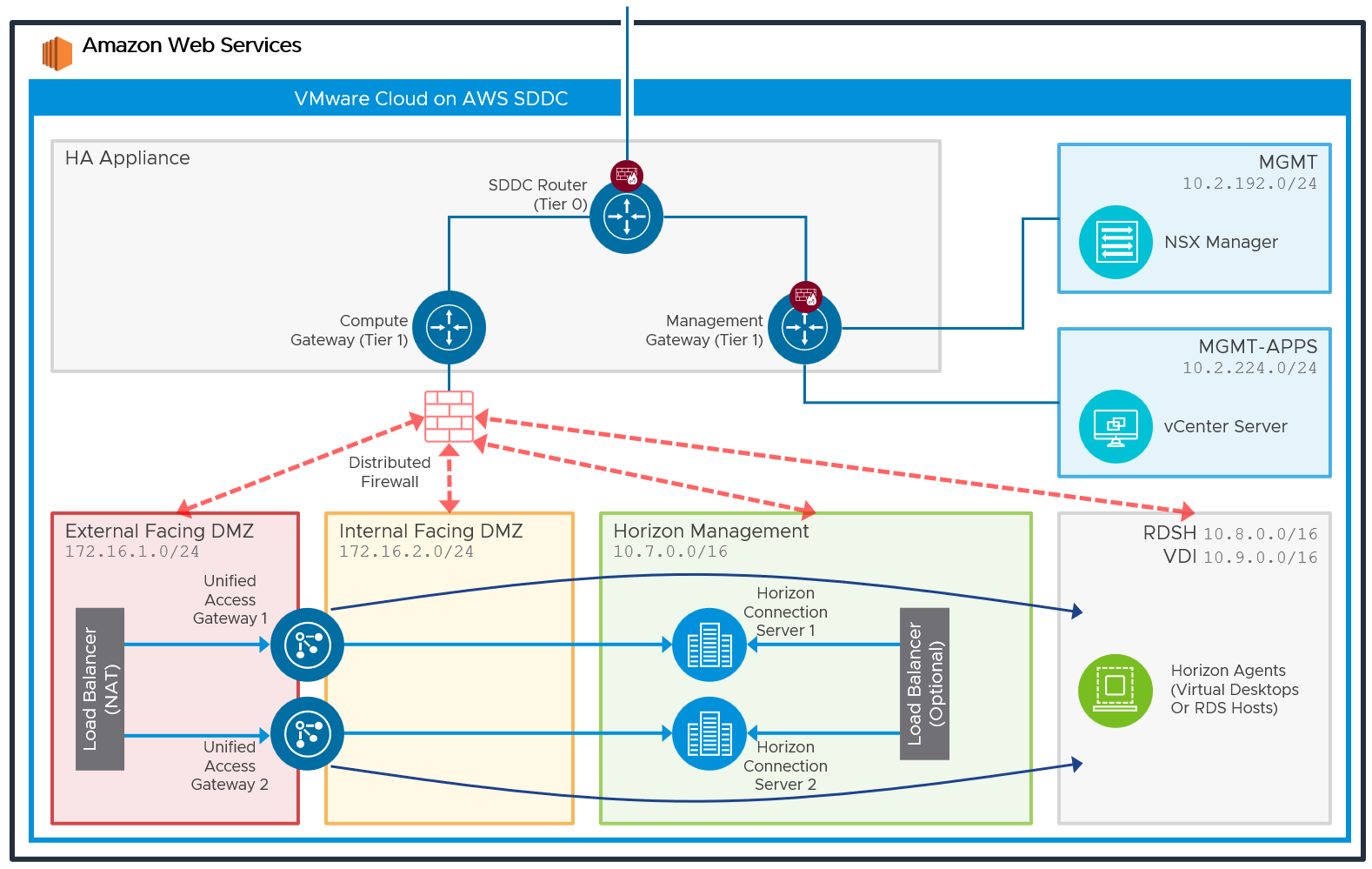

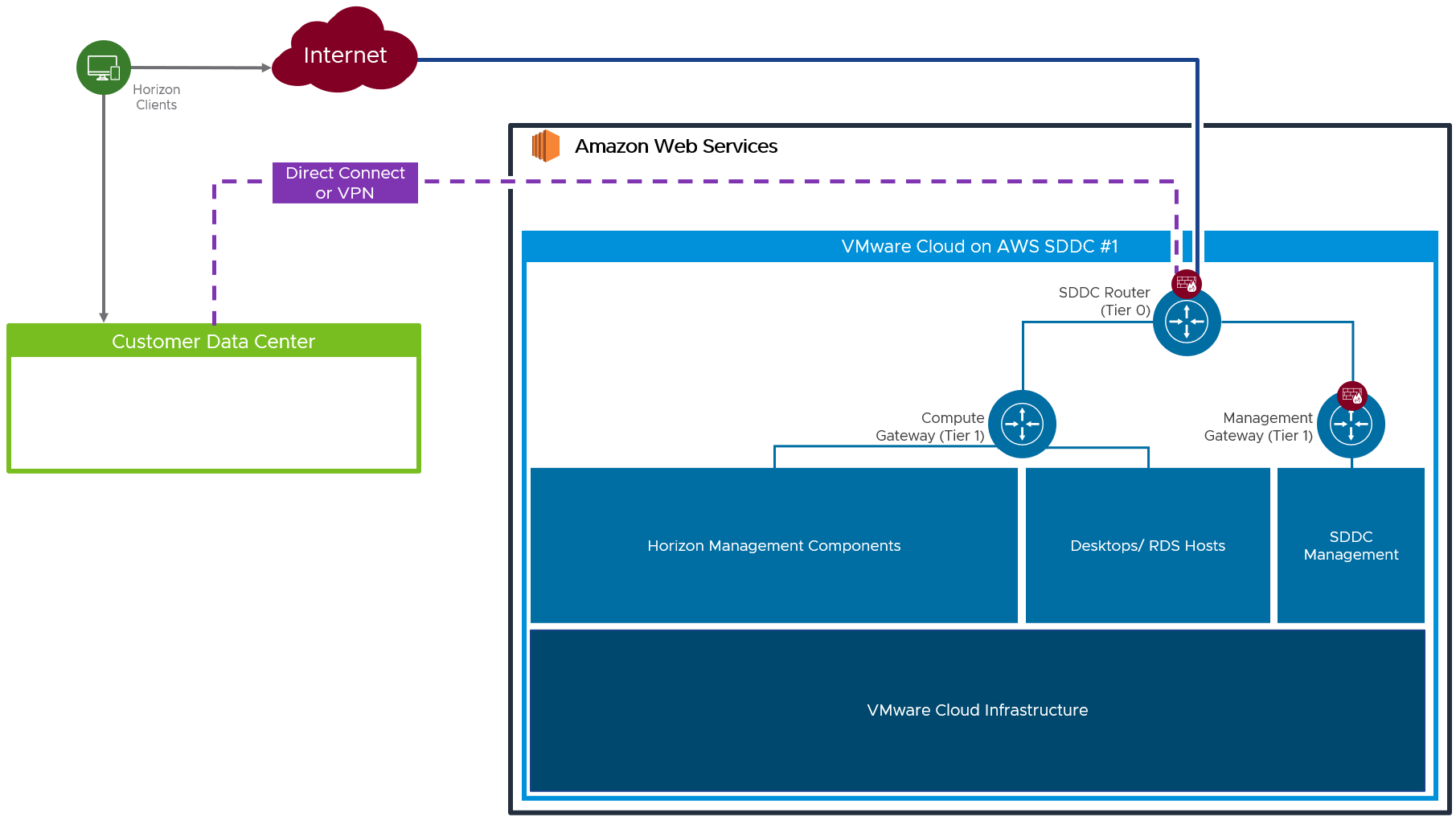

Figure 1: Horizon on VMware Cloud on AWS

For details on feature parity between Horizon on-premises and Horizon on VMware Cloud on AWS, as well as interoperability of Horizon and VMware Cloud versions, see the VMware Knowledge Base article Horizon on VMware Cloud on AWS Support (58539).

The purpose of this design chapter is to provide a set of best practices on how to design and deploy Horizon on VMware Cloud on AWS. This content is designed to be used in conjunction with Horizon documentation and VMware Cloud on AWS documentation.

| Important | It is highly recommended that you review the design concepts covered in the Horizon 8 Architecture chapter. This chapter builds on that one and only covers specific information for Horizon on VMware Cloud on AWS. |

For more information and technical guidance on VMware Cloud on AWS, see VMware Cloud: An Architectural Guide.

Deployment Options

There are two possible deployment architectures for Horizon on VMware Cloud on AWS:

- All-in-SDDC Architecture - Where all the Horizon components are located inside the VMware Cloud on AWS Software Defined Data Centers (SDDCs).

- Federated Architecture - Where the Horizon management components are located in Amazon EC2 and the Horizon resources (desktops and RDS Hosts for published applications) are located in the SDDCs.

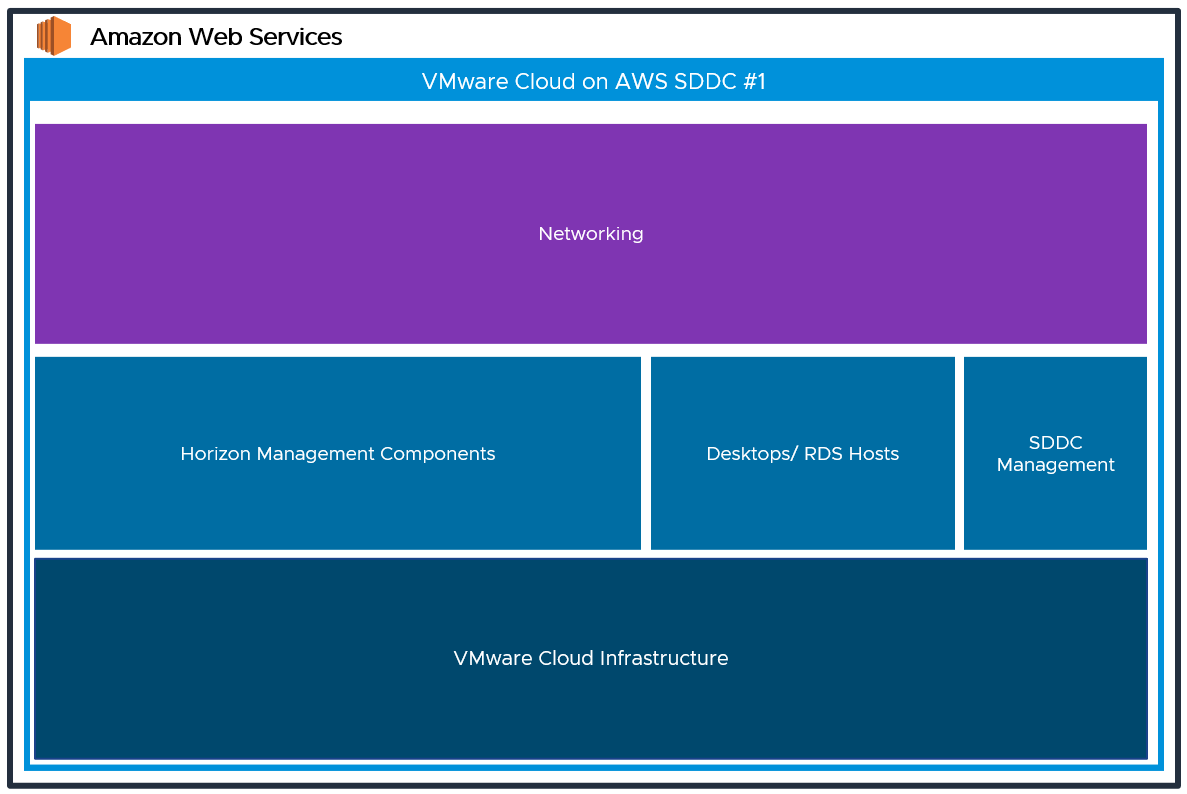

All-in-SDDC

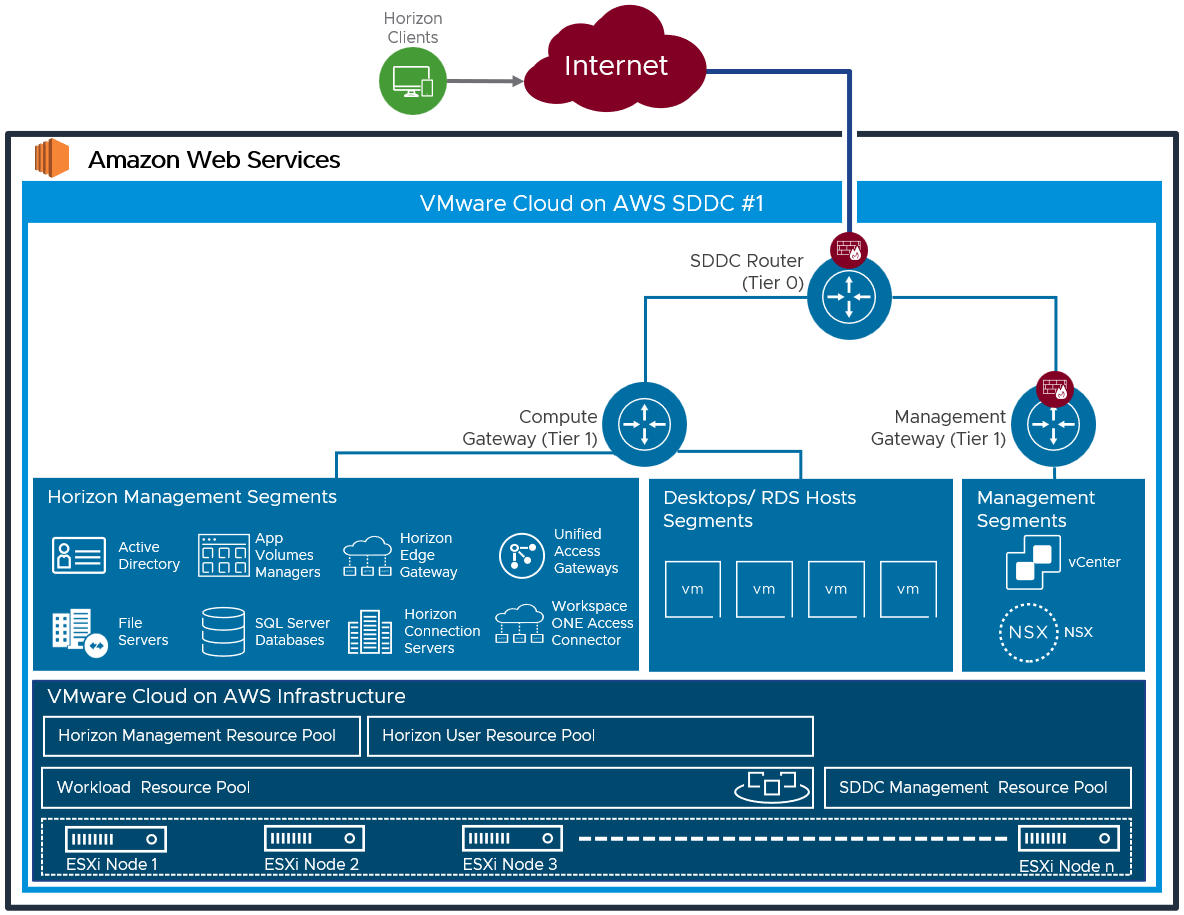

In the All-in-SDDC architecture, all Horizon components including management are located inside the VMware Cloud on AWS Software-Defined Data Centers (SDDCs). The following figure shows the high-level logical architecture of this deployment architecture. This guide will explore and cover the detail for each of the areas shown.

Figure 2: All-in-SDDC High-Level Logical Architecture

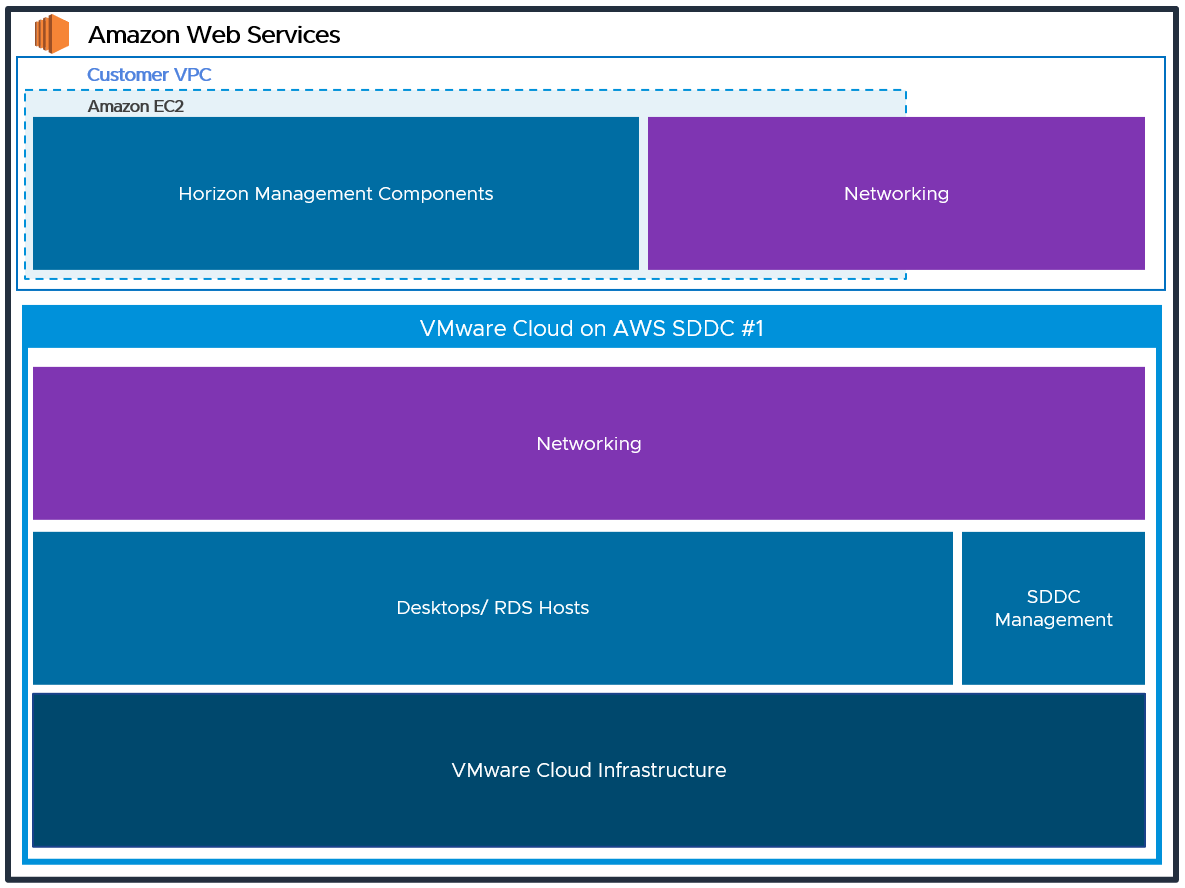

Federated Architecture

In the Federated architecture, the Horizon management components are located in Amazon EC2 in the customer VPC. The SDDC is used to host virtual desktops and RDS hosts for published applications. The following figure shows the high-level logical architecture of this deployment architecture.

Figure 3: Federated High-Level Logical Architecture

Reference Architecture Deployment Choice

This guide focuses on the All-in-SDDC architecture and deployment option. For more information on the Federated architecture, reach out to your VMware sales team.

Table 1: Horizon on VMware Cloud on AWS Deployment Strategy

| Decision | The All-in-SDDC architecture option was used in this reference architecture. |

| Justification | The All-in-SDDC architecture addressed the scale required and is simpler to deploy. |

Architectural Overview

In this section, we discuss the various components, including individual server components used for Horizon, single or multiple vSphere Software-Defined Data Centers (SDDC), management and compute components, NSX-T components, and resource pools.

Components

The individual server components used for Horizon, whether deployed on VMware Cloud on AWS or on-premises, are the same. See the Components section in the Horizon 8 Architecture for details on the common server components.

Check the knowledge base article 58539 for feature parity of Horizon on VMware Cloud on AWS. The components and features that are specific to Horizon on VMware Cloud on AWS are described in this section.

Software-Defined Data Centers (SDDC)

VMware Cloud on AWS allows you to create vSphere Software-Defined Data Centers (SDDCs) on Amazon Web Services. These SDDCs include VMware vCenter Server for VM management, VMware vSAN for storage, and VMware NSX for networking.

You can connect an on-premises SDDC to a cloud SDDC and manage both from a single VMware vSphere Web Client interface. Using a connected AWS account, you can access AWS services such as EC2 and S3 from virtual machines in your SDDC. For more information, see the VMware Cloud on AWS documentation.

Once you have deployed an SDDC on VMware Cloud on AWS, you can deploy Horizon in that cloud environment just like you would in an on-premises vSphere environment. This enables Horizon customers to outsource the management of the SDDC infrastructure to VMware. There is no requirement to purchase new hardware, and you can use the pay-as-you-go option for hourly billing on VMware Cloud on AWS.

Management Component

The management component for the network includes vCenter Server.

Compute Component

The compute component includes the following Horizon infrastructure components:

- Horizon Connection Servers

- Unified Access Gateway appliances

- App Volumes Managers

- Horizon Edge Gateway Appliance (used when connecting to Horizon Cloud Service – next-gen)

- Horizon Cloud Connector Appliance (used when connecting to Horizon Cloud Service – first-gen)

- Load balancer

- Virtual machines

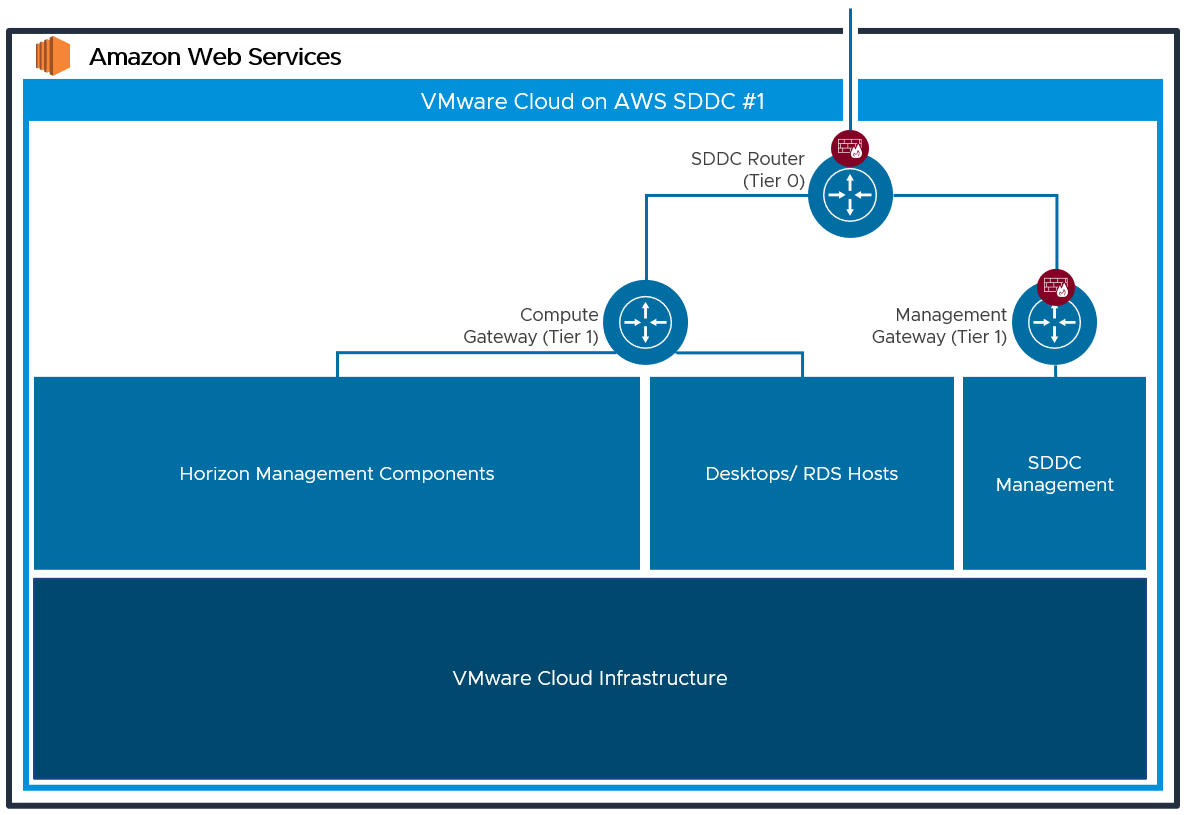

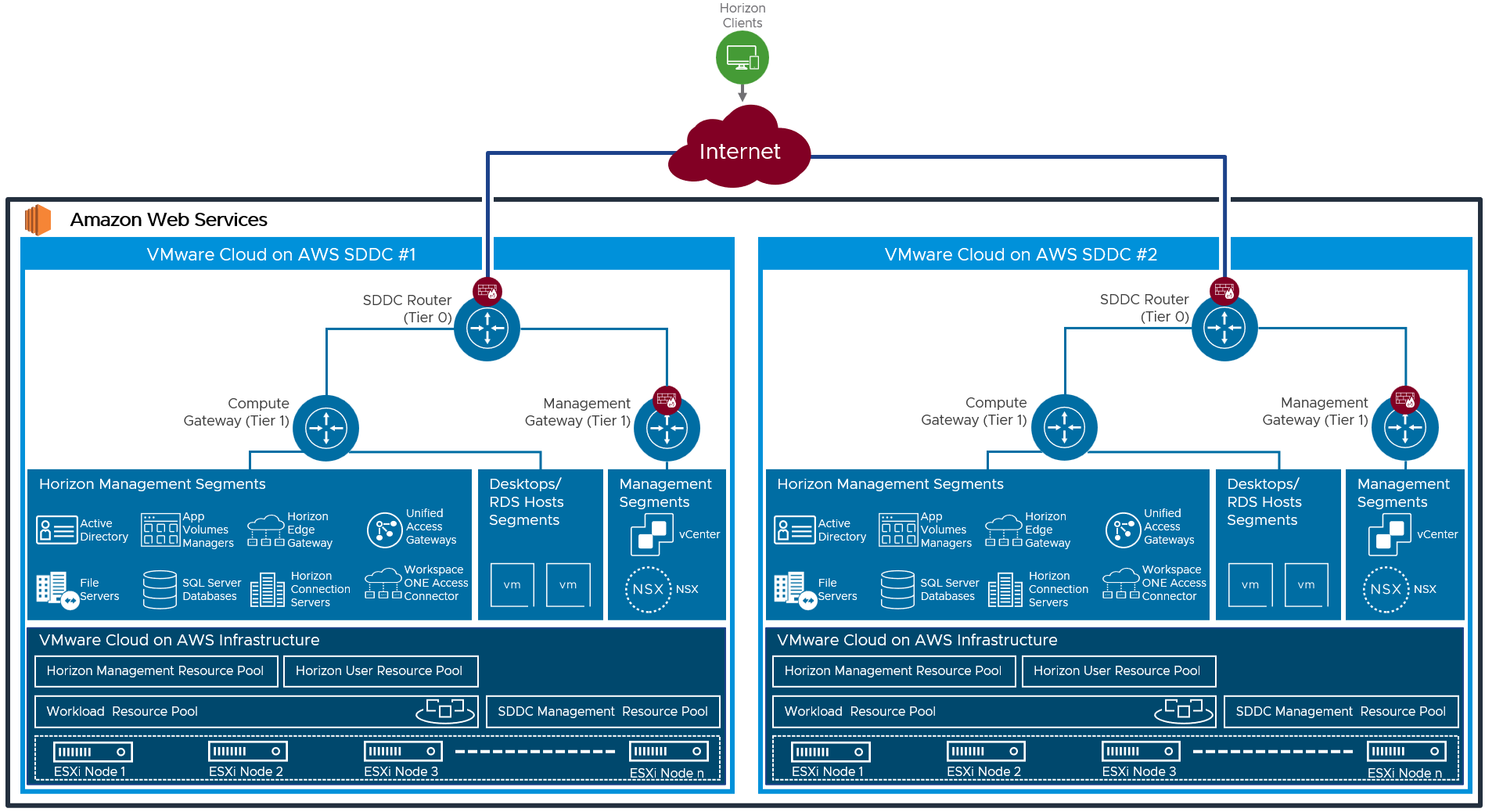

NSX-T Components

VMware NSX-T is the network virtualization platform for the Software-Defined Data Center (SDDC), delivering networking and security entirely in software, abstracted from the underlying physical infrastructure.

- Tier-0 router – Handles Internet, route- or policy-based IPsec VPN, and AWS Direct Connect traffic, and also serves as an edge firewall for the Tier-1 Compute Gateway (CGW).

- Tier-1 Compute Gateway (CGW) – Serves as a distributed firewall for all customer internal networks.

- Tier-1 Management Gateway (MGW) – Serves as a firewall for the VMware-maintained components like vCenter and NSX.

Figure 4: NSX-T Components in the SDDC

The maximum number of ports per logical network is 1000. Currently, multiple VLANs for a single Horizon pool are not supported on NSX-T, so the maximum size of the Horizon pool is limited to 1000. Of course, you can create multiple pools using different logical networks.
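The pool-size limit above turns into simple arithmetic when planning a larger deployment. The following is a minimal sketch, not a VMware tool: the function name `plan_pools` and the optional `ports_reserved_per_segment` parameter are hypothetical, and the only value taken from the text is the 1000-port limit per logical network.

```python
import math

# Per-logical-network port limit quoted in the text above.
MAX_PORTS_PER_SEGMENT = 1000


def plan_pools(total_desktops: int, ports_reserved_per_segment: int = 0) -> list[int]:
    """Split a desktop count into evenly sized pools, one logical network per pool.

    ports_reserved_per_segment is a hypothetical allowance for ports on the
    segment that are not available to desktops.
    """
    if total_desktops < 0:
        raise ValueError("total_desktops must not be negative")
    usable = MAX_PORTS_PER_SEGMENT - ports_reserved_per_segment
    if usable <= 0:
        raise ValueError("no usable ports left on the segment")

    pool_count = math.ceil(total_desktops / usable)
    if pool_count == 0:
        return []

    # Spread desktops as evenly as possible across the pools.
    base, extra = divmod(total_desktops, pool_count)
    return [base + 1 if i < extra else base for i in range(pool_count)]


if __name__ == "__main__":
    # 2500 desktops need three pools, each on its own logical network.
    print(plan_pools(2500))
```

Spreading desktops evenly, rather than filling each pool to the limit, leaves some room for growth in every pool; that is a design preference of this sketch, not a requirement stated in the text.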

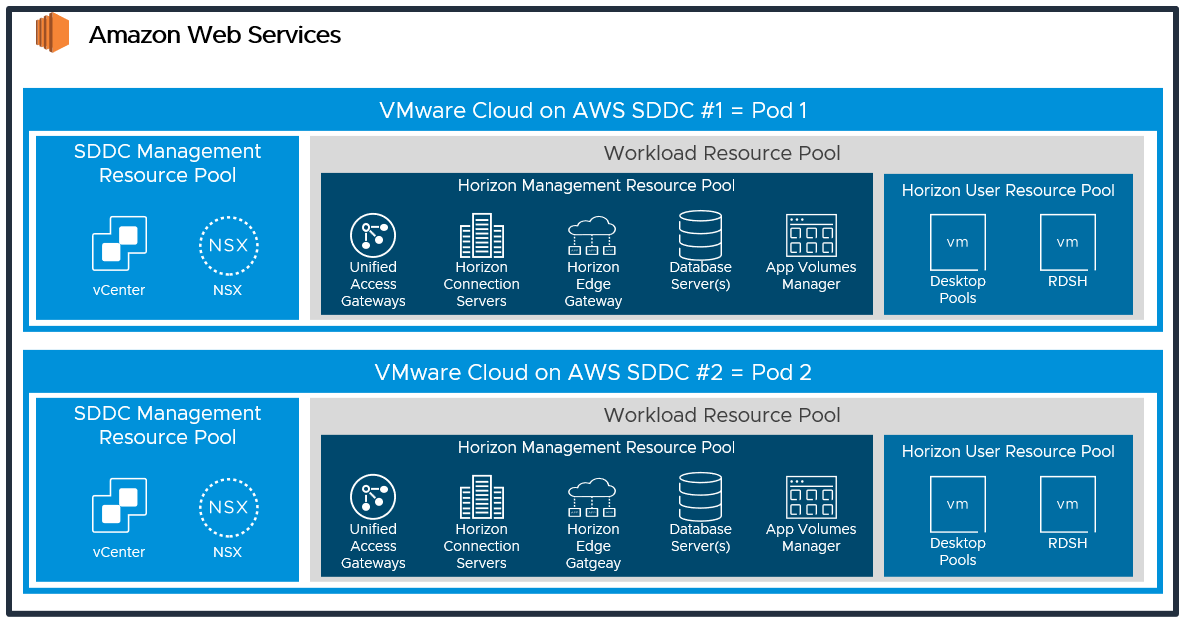

Resource Pools

A resource pool is a logical abstraction for flexible management of resources. Resource pools can be grouped into hierarchies and used to hierarchically partition available CPU and memory resources.

Within a Horizon pod on VMware Cloud on AWS, you can use vSphere resource pools to separate management components from virtual desktops or published applications workloads to make sure resources are allocated correctly.

After an SDDC instance on VMware Cloud on AWS is created, two resource pools exist:

- A Management Resource Pool with reservations that contains vCenter Server plus NSX, which is managed by VMware

- A Compute Resource Pool within which everything is managed by the customer

When using the All-in-SDDC architecture and deploying both management components and user resources in the same SDDC, it is recommended to create two sub-resource pools within the Compute Resource Pool for your Horizon deployments:

- A Horizon Management Resource Pool for your Horizon management components, such as connection servers

- A Horizon User Resource Pool for your desktop pools and published apps

Figure 5: Resource Pools for All-in-SDDC Horizon on VMware Cloud on AWS

Scalability and Availability

In this section, we discuss the concepts and constructs that can be used to provide scalability of Horizon.

Block and Pod

A key concept of Horizon, whether deployed on VMware Cloud on AWS or on-premises, is the use of blocks and pods. See the Pod and Block section in Horizon 8 Architecture.

Table 2: Block and Pod Strategy

| Decision | A single pod containing one resource block (one SDDC) was deployed. |

| Justification | In the All-in-SDDC architecture, all management components are inside the SDDC. With the All-in-SDDC architecture, it is recommended to size for one SDDC per Horizon pod. This helps to avoid protocol traffic hairpinning between SDDCs. See Scaling Deployments and Protocol Traffic Hairpinning for more information. |

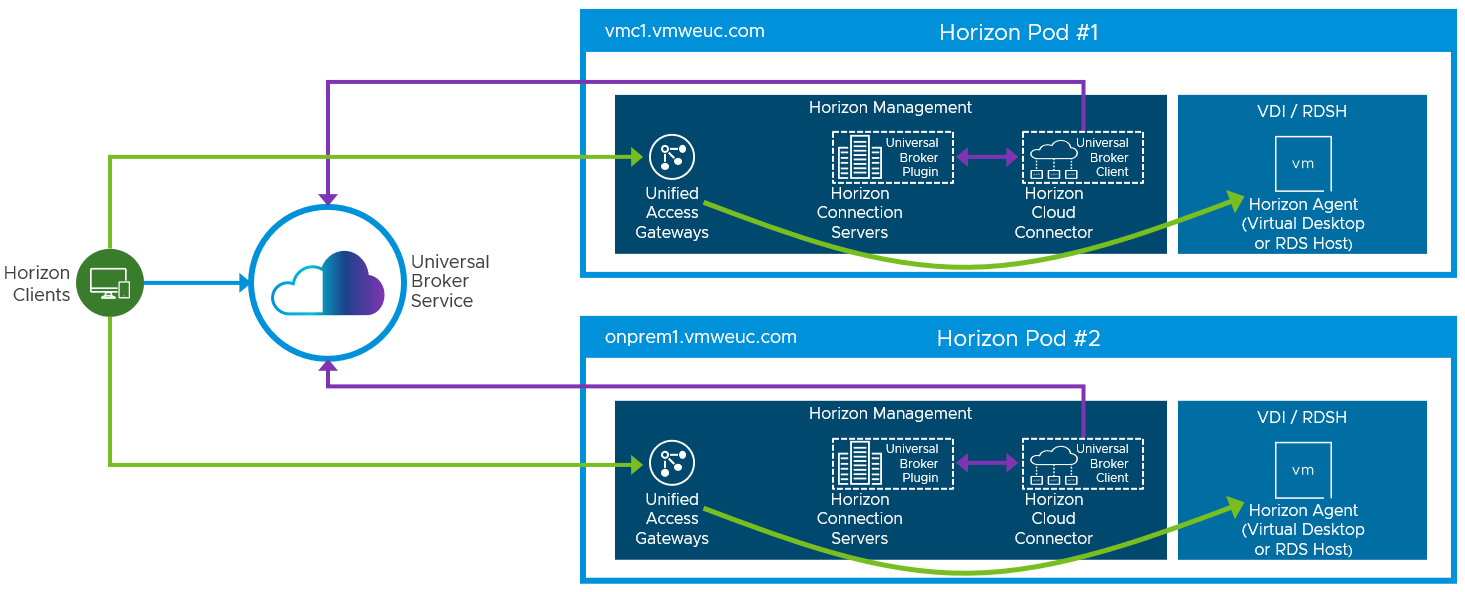

Horizon Universal Broker

The Horizon Universal Broker is a feature of Horizon Cloud Service – first-gen that allows you to broker desktops and applications to end users across all cloud-connected Horizon pods. For more information, see the Horizon Universal Broker section of the Horizon 8 Architecture chapter, and the Horizon Universal Broker section of the First-Gen Horizon Control Plane Architecture chapter.

Note: At the time of writing, the brokering service from Horizon Cloud Service – next-gen is not supported with Horizon 8 pods and resources.

The Horizon Universal Broker is the cloud-based brokering technology used to manage and allocate Horizon resources from multi-cloud assignments to end users. It allows users to authenticate and access their assignments by connecting to a single fully qualified domain name (FQDN) and then get directed to the individual Horizon pods that will deliver their virtual desktop or published applications. To facilitate user connections to Horizon resources, each Horizon Pod has its own unique external FQDN. See Scaling Deployments.

Figure 6: Universal Broker Sample Architecture

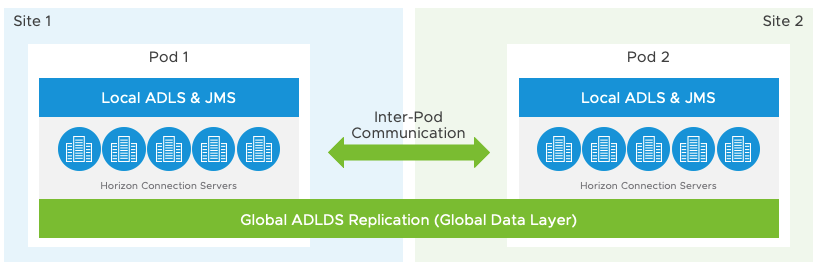

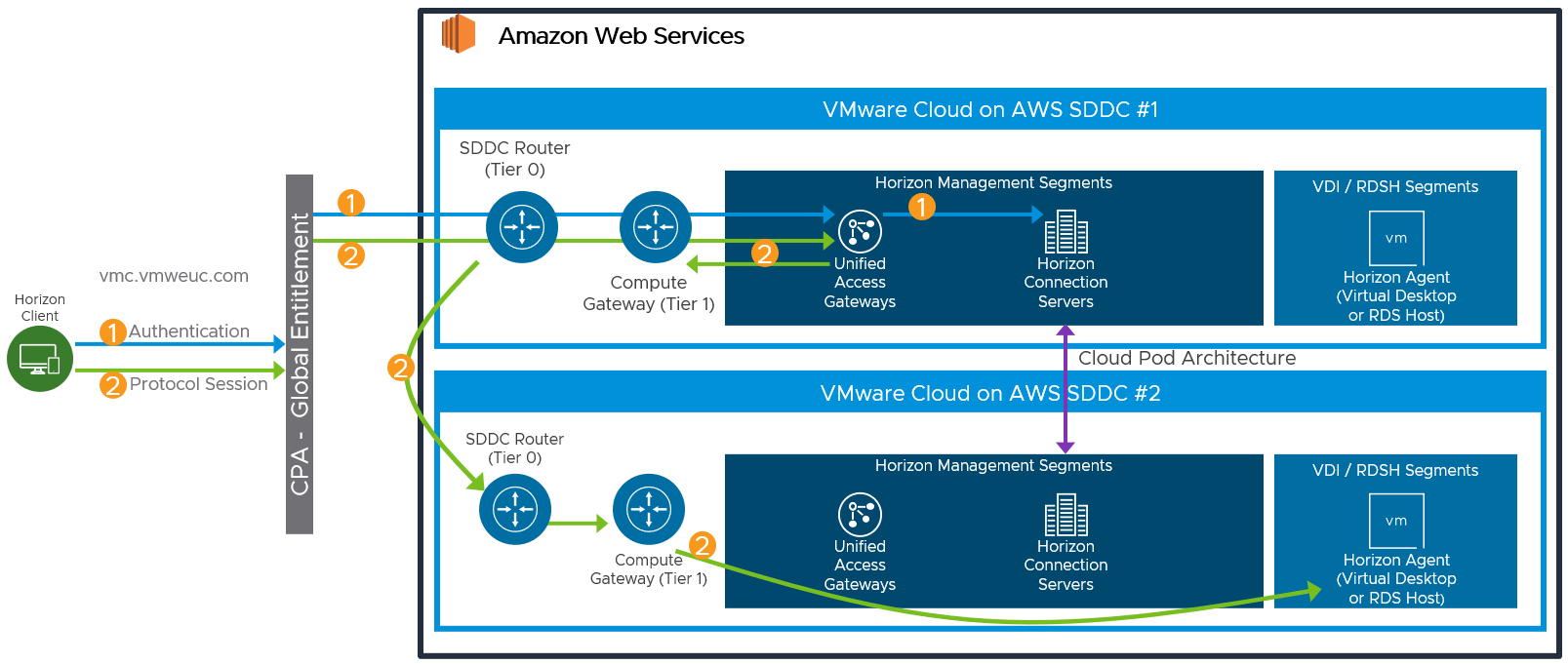

Cloud Pod Architecture

When scaling out with multiple Horizon pods, an alternative to Universal Broker is to use Cloud Pod Architecture (CPA). This allows you to connect a Horizon deployment across multiple pods and sites for federated management and user assignment.

CPA can be used to scale up a deployment, to build hybrid cloud, and to provide redundancy for business continuity and disaster recovery. CPA introduces the concept of a global entitlement (GE) that spans the federation of multiple Horizon pods and sites. Any users or user groups belonging to the global entitlement are entitled to access virtual desktops and RDS published apps on multiple Horizon pods that are part of the CPA.

Note: You cannot entitle the same Horizon resource with both Cloud Pod Architecture/global entitlements and with Universal Broker/multi-cloud assignments.

Important: CPA is not a stretched deployment; each Horizon pod is distinct and all Connection Servers belonging to each of the individual pods are required to be located in a single location and to run on the same broadcast domain from a network perspective.

Here is a logical overview of a basic two-site/two-pod CPA implementation. For VMware Cloud on AWS, Site 1 and Site 2 might be different AWS regions, or Site 1 might be on-premises and Site 2 might be on VMware Cloud on AWS.

Figure 7: Cloud Pod Architecture

For the full documentation on how to set up and configure CPA, refer to Administering View Cloud Pod Architecture in the Horizon documentation and the Cloud Pod Architecture section in Horizon Configuration.

Table 3: Cloud Pod Architecture Strategy

| Decision | Cloud Pod Architecture was not used in this reference architecture. |

| Justification | Universal Broker and multi-cloud assignments were used instead to allow scaling to multiple Horizon Pods or environments while providing simple user access. |

Linking Horizon Pods with CPA

You can use the Cloud Pod Architecture feature to connect Horizon pods regardless of whether the pods are on-premises or on VMware Cloud on AWS. When you deploy two or more Horizon pods on VMware Cloud on AWS, you can manage them independently or manage them together by linking them with Cloud Pod Architecture.

- On one Connection Server, initialize Cloud Pod Architecture and join the Connection Server to a pod federation.

- Once initialized, you can create a global entitlement across your Horizon pods on-premises and on VMware Cloud on AWS.

- Optionally, when you use Cloud Pod Architecture, you can deploy a global load balancer (such as F5, AWS Route 53, or others) between the pods. The global load balancer provides a single-namespace capability that allows the use of a common global namespace when referring to Horizon CPA. Using CPA with a global load balancer provides your end users with a single connection method and desktop icon in their Horizon Client or Workspace ONE console.

Without the global load balancer and the ability to have a single namespace for multiple environments, end users will be presented with a possibly confusing array of desktop icons (corresponding to the number of pods on which desktops have been provisioned for them). For more information on how to set up Cloud Pod Architecture, see the Cloud Pod Architecture in Horizon.

Use Cloud Pod Architecture to link any number of Horizon pods on VMware Cloud on AWS. The maximum number of pods must conform to the limits set for pods in Cloud Pod Architecture. For the most current numbers for Horizon 8, see the Horizon 8 2206 Configuration Limits. For Horizon 7, see the VMware Knowledge Base article VMware Horizon 7 Sizing Limits and Recommendations (2150348).

When you connect multiple Horizon pods together with Cloud Pod Architecture, the Horizon versions for each of the pods can be different from one another.

Using CPA to Build Hybrid Cloud and Scale for Horizon

You can deploy Horizon in a hybrid cloud environment when you use CPA to interconnect Horizon pods on-premises and Horizon pods on VMware Cloud on AWS. You can entitle your users to virtual desktops and RDS published apps on-premises, on VMware Cloud on AWS, or both. You can configure the entitlements so that users connect to whichever site is closest to them geographically as they roam.

You can also stretch CPA across Horizon pods in two or more VMware Cloud on AWS data centers with the same flexibility to entitle your users to one or multiple pods as desired.

To configure Cloud Pod Architecture between Horizon deployments on VMware Cloud on AWS and on-premises locations, follow these steps.

- Configure VPN and firewall rules to enable the Connection Server instances on VMware Cloud on AWS to communicate with the Connection Server instances in the on-premises data centers.

- Ensure that your on-premises Horizon version is 7.0 or later.

Note: The Horizon version deployed on-premises does not have to match the Horizon version deployed on VMware Cloud on AWS. However, you cannot mix a Horizon 6 pod (or earlier) with a Horizon 8 or 7 pod within the same CPA.

- Use Cloud Pod Architecture to connect the other Horizon pods with the Horizon pod on VMware Cloud on AWS.

Of course, use of CPA is optional. You can choose to deploy Horizon exclusively in a single VMware Cloud on AWS data center without linking it to any other data center or use the Universal Broker with multi-cloud assignments.

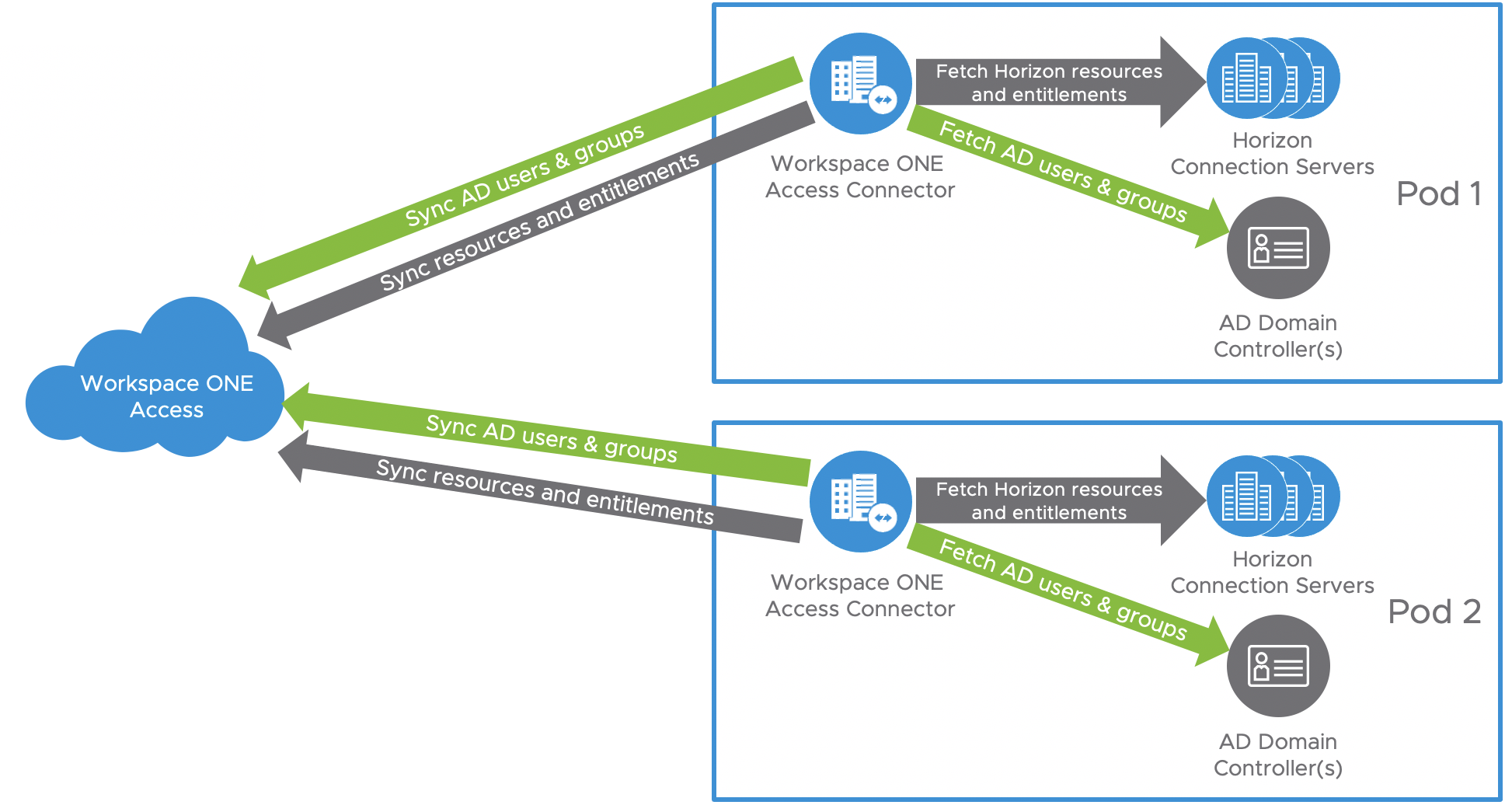

Workspace ONE Access

Workspace ONE® Access can be used to broker Horizon resources. In this case, the entitlements and resources are synced to Workspace ONE Access, and Workspace ONE Access knows the FQDN of each Horizon pod so that it can direct users to their desktop or application resources. Workspace ONE Access can also be used in combination with Universal Broker with multi-cloud assignments or with Cloud Pod Architecture and global entitlements.

For more design details, see Horizon and Workspace ONE Access Integration in the Platform Integration chapter.

Figure 8: Syncing Horizon Resources into Workspace ONE Access

Sizing Horizon on VMware Cloud on AWS

Similar to deploying Horizon on-premises, you need to size your requirements for deploying Horizon on VMware Cloud on AWS to determine the number of hosts you need to deploy. Hosts are needed for the following purposes:

- Your virtual desktop or RDS workloads.

- Your Horizon infrastructure components, such as Connection Servers, Unified Access Gateways, and App Volumes Managers.

- SDDC infrastructure components on VMware Cloud on AWS. These components are deployed and managed automatically for you by VMware, but you need capacity in your SDDC for running them.

The methodology for sizing Horizon on VMware Cloud on AWS is the same as for on-premises deployments. The difference is the fixed hardware configurations on VMware Cloud on AWS. Work with your VMware sales team to determine the correct sizing.

At the time of writing, the minimum number of hosts required per SDDC on VMware Cloud on AWS for production use is 3 nodes (hosts). For testing purposes, a 1-node SDDC is also available. However, since a single node does not support HA, we do not recommend it for production use. Horizon can be deployed on a single-node SDDC or a multi-node SDDC. If you are deploying on a single-node SDDC, make sure to change the FTT policy setting on vSAN from 1 (default) to 0.
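As a rough illustration of how the host count can be derived, the sketch below sizes on CPU only. Everything except the 3-host production minimum is an assumption made for the example: the function name `hosts_required`, the per-desktop vCPU count, the infrastructure vCPU overhead, the host core count, the vCPU-to-core ratio, and the spare host for HA are hypothetical inputs. Real sizing must also account for memory and vSAN capacity, and should be validated with your VMware sales team as noted above.

```python
import math

# Minimum production SDDC size quoted in the text (at the time of writing).
MIN_PRODUCTION_HOSTS = 3


def hosts_required(
    desktops: int,
    vcpus_per_desktop: int,
    infra_vcpus: int,
    physical_cores_per_host: int,
    vcpu_to_core_ratio: int,
    ha_spare_hosts: int = 1,
) -> int:
    """Estimate host count from CPU demand only (hypothetical, simplified model)."""
    # Demand: desktop or RDS workloads plus Horizon and SDDC infrastructure VMs.
    total_vcpus = desktops * vcpus_per_desktop + infra_vcpus
    # Supply per host at the chosen overcommit ratio.
    vcpus_per_host = physical_cores_per_host * vcpu_to_core_ratio
    hosts = math.ceil(total_vcpus / vcpus_per_host) + ha_spare_hosts
    return max(hosts, MIN_PRODUCTION_HOSTS)


if __name__ == "__main__":
    # Illustrative inputs only: 1000 two-vCPU desktops, 100 vCPUs of
    # infrastructure, 36-core hosts, 6:1 overcommit, one spare host.
    print(hosts_required(1000, 2, 100, 36, 6))
```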

Network Configuration

When SDDCs are deployed on VMware Cloud on AWS, NSX-T is used for network configuration. After you deploy an SDDC instance, two isolated networks exist: a management network and a compute network. Each has its own NSX Edge Gateway and an NSX Distributed Logical Router for extra networks in the compute section.

The recommended network architecture for Horizon on VMware Cloud on AWS consists of a double DMZ and a separation between Horizon management components and the RDSH and VDI virtual machines. When using the All-in-SDDC architecture, the network segments for the management components reside inside the SDDC as shown in the diagram below.

Figure 9: All-in-SDDC Logical Networking (Subnets are for Illustrative Purposes Only)

Network segments can be added using the VMware Cloud admin console for:

- External-DMZ

- Internal-DMZ

- Horizon-Management

- VDI and RDSH
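Before creating the segments, it can help to check a candidate addressing plan for overlaps and capacity. The sketch below uses Python's standard `ipaddress` module. The subnets are placeholders chosen for this example (they are not taken from the reference architecture), and the helper names `find_overlaps` and `usable_hosts` are hypothetical.

```python
import ipaddress
from itertools import combinations

# Segment names from the list above; the CIDR ranges are illustrative only.
SEGMENTS = {
    "External-DMZ": "192.168.10.0/24",
    "Internal-DMZ": "192.168.11.0/24",
    "Horizon-Management": "192.168.20.0/24",
    "VDI and RDSH": "192.168.32.0/22",
}


def find_overlaps(segments: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of segment names whose address ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in segments.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(nets.items(), 2)
        if net_a.overlaps(net_b)
    ]


def usable_hosts(cidr: str) -> int:
    """Addresses left after removing the network and broadcast addresses.

    The segment gateway also consumes one address, so treat this as an
    upper bound.
    """
    return ipaddress.ip_network(cidr).num_addresses - 2


if __name__ == "__main__":
    print("Overlapping segments:", find_overlaps(SEGMENTS))
    for name, cidr in SEGMENTS.items():
        print(f"{name}: {cidr} ({usable_hosts(cidr)} usable addresses)")
```

In this example a /22 is used for the desktop segment because it offers roughly the same number of addresses as the 1000-port limit per logical network mentioned earlier; a larger subnet would not allow a larger pool.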

Firewall Rules

The firewall service on VMware Cloud on AWS is based on NSX-T and provides both Distributed (Micro-segmentation) and Gateway Firewall Services.

To simplify the management of the Gateway Firewall, VMware recommends using Groups (located under Networking & Security > Inventory) for both Compute and Management. For details, see Firewall Rules in Horizon on VMware Cloud on AWS Configuration.

Note: The default behavior of both the Management and Compute Gateway Firewalls is to deny all traffic not explicitly enabled.
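The default-deny behavior can be pictured as a rule list that is checked in order, with anything unmatched being dropped. The following is a conceptual model only, assuming top-down, first-match evaluation. It does not use any NSX or VMware Cloud API, and the `Rule` class, the `evaluate` function, and all IP addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    source: str        # CIDR of the allowed source group
    destination: str   # CIDR of the allowed destination group
    port: int
    action: str = "ALLOW"


def evaluate(rules: list[Rule], src_ip: str, dst_ip: str, port: int) -> str:
    """Return the action of the first matching rule, or DENY if none matches."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in rules:
        if (
            src in ipaddress.ip_network(rule.source)
            and dst in ipaddress.ip_network(rule.destination)
            and port == rule.port
        ):
            return rule.action
    # Nothing matched: traffic that is not explicitly enabled is denied.
    return "DENY"


# Illustrative rule: Horizon management segment to vCenter Server over HTTPS.
# Both address ranges are placeholders.
EXAMPLE_RULES = [
    Rule("horizon-mgmt-to-vcenter", "192.168.20.0/24", "10.2.224.4/32", 443),
]

if __name__ == "__main__":
    print(evaluate(EXAMPLE_RULES, "192.168.20.11", "10.2.224.4", 443))
    print(evaluate(EXAMPLE_RULES, "192.168.32.50", "10.2.224.4", 443))
```

With this rule set, a Connection Server on the management segment reaches vCenter Server, while a desktop on the VDI segment is denied because no rule enables that flow.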

Management Gateway Firewall

The Management Gateway Firewall controls network traffic for the SDDC management components, such as the vCenter Server and NSX Manager. Because the Horizon Connection Servers must communicate with the vCenter Server, firewall rules must be configured on the Management Gateway Firewall (MGW) to allow traffic from the Horizon management components to the vCenter Server and vSphere ESXi hosts.

See Firewall Rules for details on the rules required. See Configure Management Gateway Networking and Security for more details.

Compute Gateway Firewall

The Compute Gateway Firewall runs on the SDDC router (Tier 0) and provides firewalling for the Compute Gateway and the network segments defined on it.

See Firewall Rules and Configure Compute Gateway Networking and Security for more details.

Distributed Firewall

The network segments you define for the Horizon management components and the RDSH and VDI virtual machines are attached to the Compute Gateway network, and all traffic will be allowed between these segments by default. The NSX Distributed Firewall should be used to apply rules to restrict the traffic between the network segments, the VMs, and the required network ports.

Check the Add or Modify Distributed Firewall Rules section of the VMware Cloud on AWS Product Documentation for more information on configuring Distributed Firewall.

See Network Ports in VMware Horizon for details on the network ports required between Horizon components.

Unified Access Gateways

To ensure redundancy and scale, multiple Unified Access Gateways are deployed. To implement them in a highly available configuration and provide a single namespace, either the high availability feature built into Unified Access Gateway or a third-party load balancer, such as AWS Elastic Load Balancer (ELB) or NSX Advanced Load Balancer (Avi), can be used.

See the Load Balancing Unified Access Gateway section of the Horizon 8 Architecture chapter and the High Availability section of the Unified Access Gateway Architecture chapter.

Table 4: Load Balancing Unified Access Gateways Strategy

| Decision | Unified Access Gateway High Availability was used to present a single virtual IP and namespace for the Unified Access Gateways. |

| Justification | Using Unified Access Gateway HA simplifies the deployment. HA with two Unified Access Gateways provides the required scale and capability of an individual Horizon pod within an SDDC. |

To provide direct external access, a public IP address would be configured with Network Address Translation (NAT) to forward traffic to either the Unified Access Gateway HA virtual IP address or to the load balancer virtual IP address.

When using Unified Access Gateway HA, the individual Unified Access Gateway appliances also each require a public IP address and NAT configured to route the Horizon protocol traffic to them.

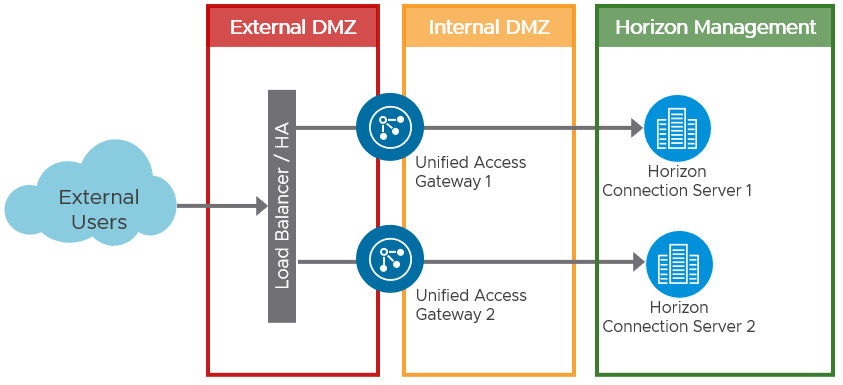

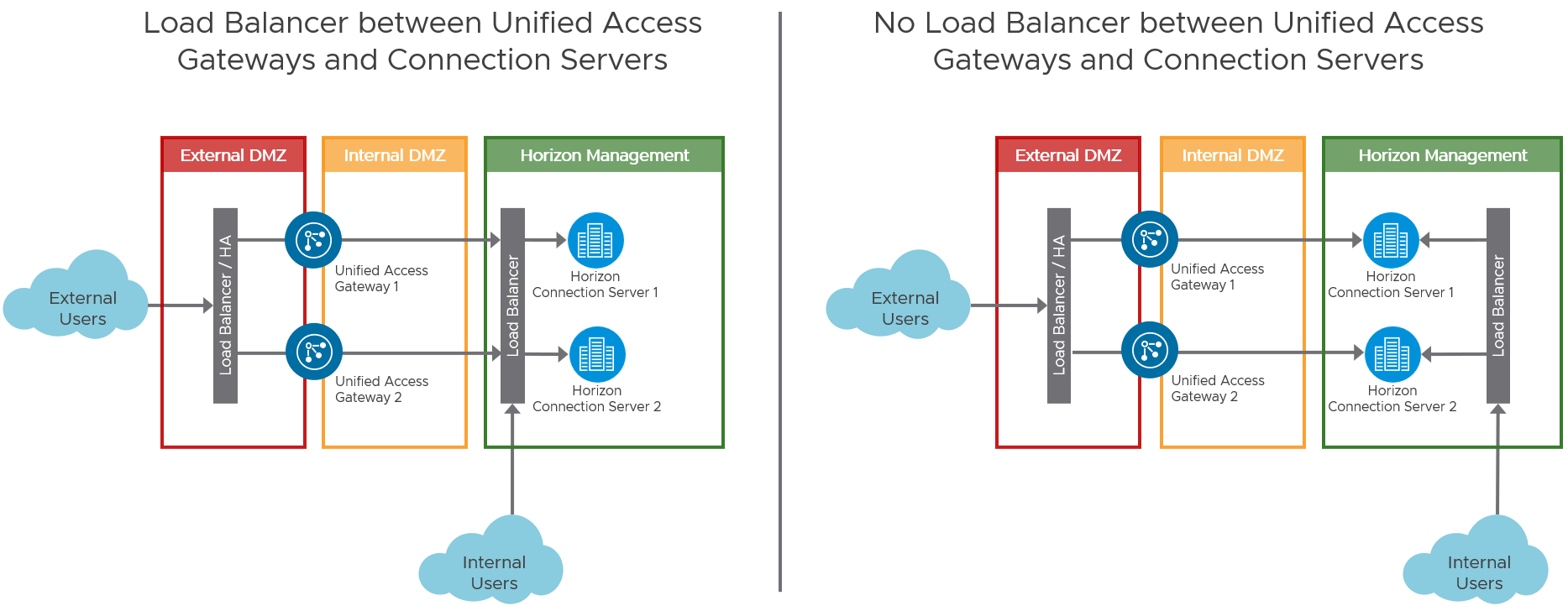

Load Balancing Connection Servers

Multiple Connection Servers are deployed for scale and redundancy. Depending on the user connectivity, you may or may not need to deploy a third-party load balancer to provide a single namespace for the Connection Servers. When all user connections originate externally and come through a Unified Access Gateway, it is not necessary to have a load balancer between the Unified Access Gateways and the Connection Servers. Each Unified Access Gateway can be defined with a specific Connection Server as its destination.

Figure 10: Load Balancing when all Connections Originate Externally

Where users will be connecting from internally routed networks and their session will not go via a Unified Access Gateway, a load balancer should be used to present a single namespace for the Connection Servers. A third-party load balancer, such as AWS Elastic Load Balancer (ELB) or NSX Advanced Load Balancer (Avi), should be deployed. For more information, see the Load Balancing Connection Servers section of the Horizon 8 Architecture chapter.

When required for internally routed connections, a load balancer for the Connection Servers can be either:

- Placed in between the Unified Access Gateways and the Connection Server and used as an FQDN target for both internal users and also the Unified Access Gateways.

- Located so that only the internal users use it as an FQDN.

Figure 11: Options for Load Balancing Connection Servers for Internal Connections

Table 5: Load Balancing Connection Servers Strategy

| Decision | No load balancer was used with the Connection Servers. |

| Justification | All user sessions originate externally and are routed via the Unified Access Gateways. |

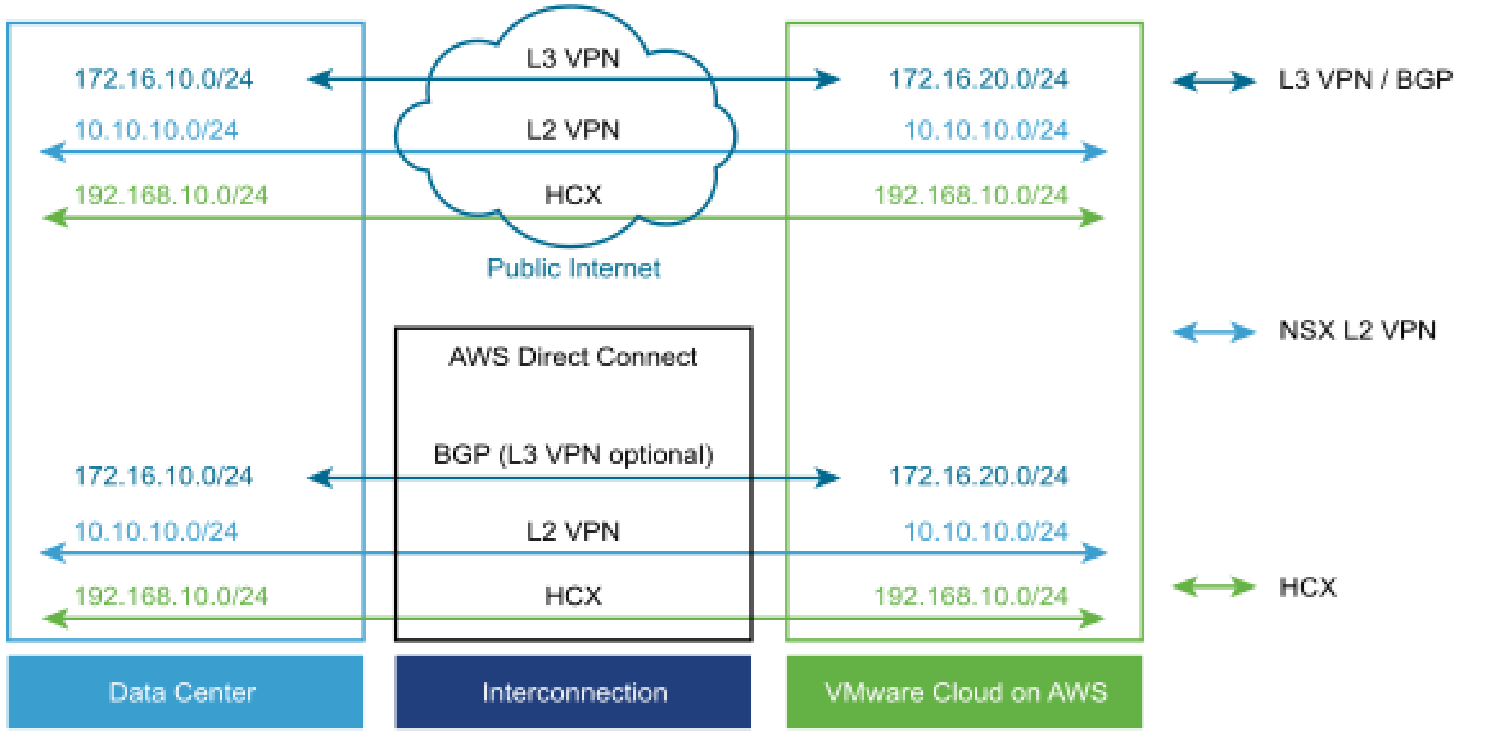

External Networking

The following options are available for external network connectivity to the SDDC:

- VPN (policy- or route-based) over public Internet

- VPN (policy- or route-based) over AWS Direct Connect

- AWS Direct Connect

Figure 12: VMware Cloud on AWS Network Connectivity for Horizon

The best option depends on your requirements. For a predictable networking experience, AWS Direct Connect (DX) is recommended. Once the connection is established, ensure that the routing configuration permits the required traffic flow (for example, that all required subnets are correctly announced via BGP to VMware Cloud on AWS for AWS Direct Connect and route-based VPNs). Additional network capabilities provided by VMware Hybrid Cloud Extension (HCX) or L2VPN can be leveraged if needed. For more detail, see the NSX-T Networking Concepts section of the VMware Cloud on AWS Product Documentation.

For an overview and technical details on external networking with VMware Cloud on AWS, see the Network module in the VMware Cloud: An Architectural Guide.

VPN

For external management or access to external resources, create a VPN or direct connection to the Tier-0 router. You can configure a route-based IPsec VPN or a policy-based IPsec VPN.

- Route-based VPN uses the routed tunnel interface as the endpoint of the SDDC network to allow access to multiple subnets within the network. Local and remote networks are discovered using BGP advertisements.

- Policy-based VPN allows access to a subnet of the SDDC network.

Direct Connect

AWS Direct Connect (DX) is a cloud service solution that makes it easy to establish a dedicated network connection from your premises to AWS. Using AWS Direct Connect, you can establish private connectivity between AWS and your data center, office, or co-location environment, which in many cases can reduce your network costs, increase bandwidth throughput, and provide a more consistent network experience than Internet-based connections.

AWS Direct Connect lets you establish a dedicated network connection between your network and one of the AWS Direct Connect locations. Using industry standard 802.1q VLANs, this dedicated connection can be partitioned into multiple virtual interfaces. This allows you to use the same connection to access public resources such as objects stored in Amazon S3 using public IP address space, and private resources such as Amazon EC2 instances running within an Amazon Virtual Private Cloud (VPC) using private IP space, while maintaining network separation between the public and private environments. Virtual interfaces can be reconfigured at any time to meet your changing needs.

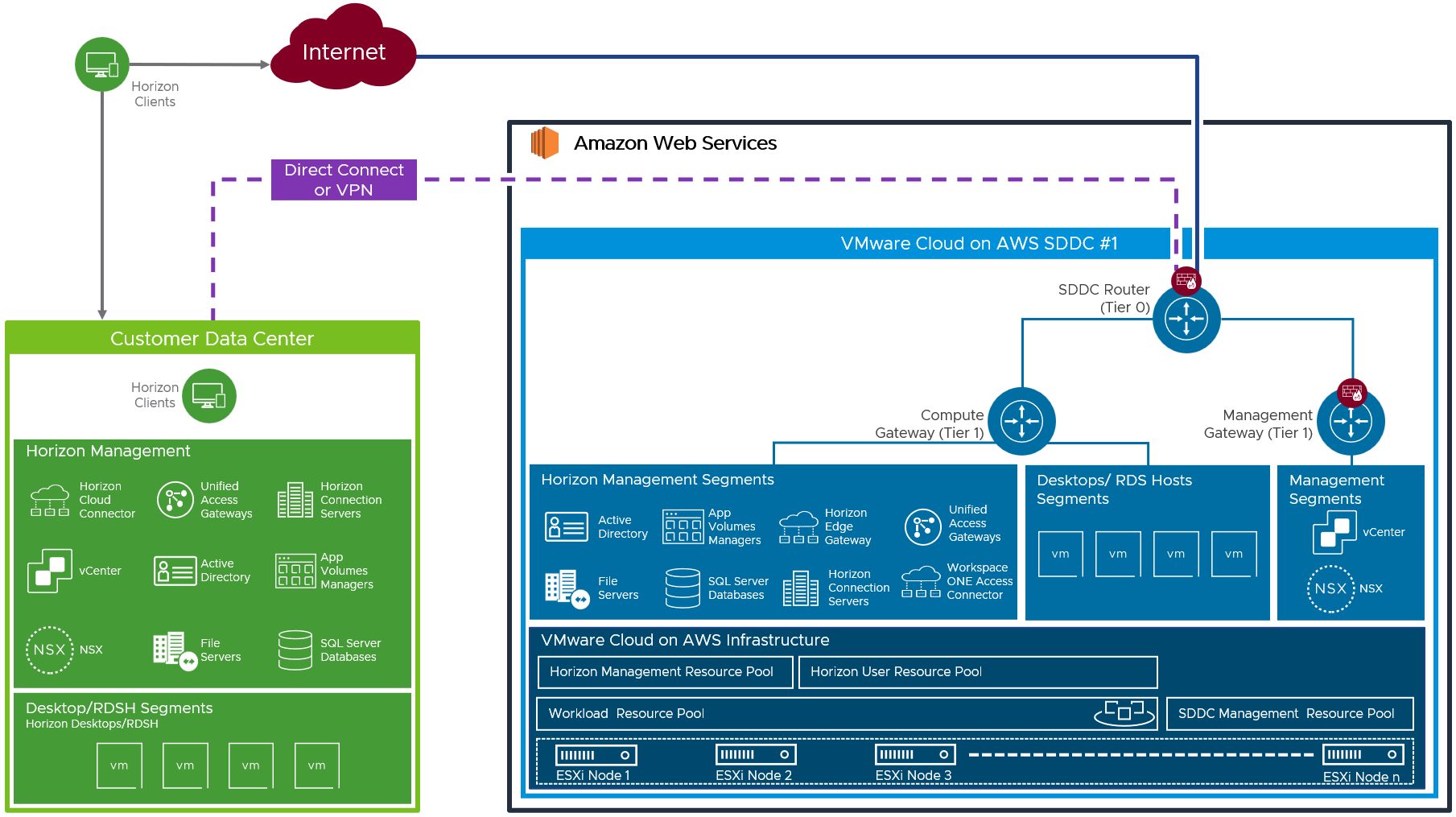

Networking to an On-Premises Data Center

When networking to an on-premises data center is required, either Direct Connect or a VPN can be used as described in the previous section.

Figure 13: VMware Cloud on AWS Networking to On-Premises

Table 6: Virtual Private Network Strategy

| Decision | A Virtual Private Network (VPN) was established between AWS and the on-premises environment. |

| Justification | Allow for extension of on-premises services into the VMware Cloud on AWS environment. |

Table 7: Domain Controller Strategy

| Decision | Domain controllers were deployed as members of the existing on-premises Active Directory domain. |

| Justification | A site was created to keep authentication traffic local to the site. This allows Group Policy settings to be applied locally. In the event of an issue with the local domain controller, authentication requests can traverse the VPN back to the on-premises domain controllers. |

Table 8: File Server Strategy

| Decision | A file server was deployed with DFS-R replication to on-premises file servers. |

| Justification | General file services for the local site. Allows the common DEM configuration data to be replicated to this site. Keeps the profile data for DEM local to the site, with a backup to on-premises. |

DHCP Service

It is critical to ensure that all VDI desktops are assigned a proper IP address. In most cases, you opt for automatic IP assignment.

VMware Cloud on AWS supports the following ways to assign IP addresses to clients:

- NSX-T based local DHCP service, attached to the Compute Gateway (default).

- DHCP Relay, customer managed.

Note: You cannot configure DHCP Relay if the Compute Gateway includes any segments that provide their own DHCP services.

Table 9: DHCP Service Strategy

| Decision | Local DHCP services were provided by the NSX-T services within the SDDC. DHCP was configured on the VDI and RDS Host network segments. |

| Justification | Providing local DHCP services reduces latency and any dependency on remote DHCP services and network links to those services. |

DNS Service

Reliable and correctly configured name resolution is key to a successful hybrid Horizon deployment. While designing your environment, make sure you understand the DNS strategies for VMware Cloud on AWS. Your design choice will directly influence the configuration details. You should configure:

- Management Gateway DNS. By default, Google DNS Servers are used. To be able to resolve on-premises resources (on-premises vCenter Server name, ESXi host names, and so on) you will need to specify your own DNS server, capable of resolving names of the mentioned resources.

- Set the DNS resolution of VMware Cloud on AWS vCenter to Private (VMware Cloud on AWS Console, Settings – vCenter FQDN – Resolution Address).

- Compute Gateway DNS. If you opt to use your own DHCP Relay, ensure that the DNS Server option is configured correctly on the DHCP server specified as the relay. VMware recommends using a local DNS server (hosted on VMware Cloud on AWS) to reduce dependency on the connection link to on-premises. Using a native AWS service to host your DNS might be another option, as described below.

Table 10: DNS Service Strategy

| Decision | DNS services were provided by the new domain controllers deployed in VMware Cloud on AWS. |

| Justification | This allows DNS queries to be addressed locally. |

Data Egress Cost

Unlike on-premises deployments, deploying Horizon on VMware Cloud on AWS incurs data egress costs based on the amount of data egress traffic the environment will generate. It is important to understand and estimate the data egress traffic.

Understanding Different Types of Data Egress Traffic

Depending on the deployment use case, you may be incurring costs for some or all of the following types of data egress traffic:

- End-user traffic via the Internet – You have configured your environment so that your end users connect to their virtual desktops on VMware Cloud on AWS remotely via the Internet. Any data leaving the VMware Cloud on AWS data center will incur egress charges. Egress data consists of the following components: outbound data from Horizon protocols and outbound data from remote experience features (for example, remote printing). While the former is typically predictable, the latter has more variance and depends on the exact activity of the user.

- End-user traffic via the on-premises data center – You have configured your environment so that your end users connect to their virtual desktops on VMware Cloud on AWS via your on-premises data center. In this case, you will have to link your data center with the VMware Cloud on AWS data center using VPN or Direct Connect. Any data traffic leaving the VMware Cloud on AWS data center and traveling back to your data center will incur egress charges. If you have Cloud Pod Architecture (CPA) configured between the on-premises environment and the VMware Cloud on AWS environment, you will also incur egress charges for any CPA traffic between the two pods (although CPA traffic is typically fairly light).

- External application traffic – You have configured your environment so that your virtual desktops on VMware Cloud on AWS must access applications hosted either in your on-premises environment or in another cloud. Any data traffic leaving the VMware Cloud on AWS data center and traveling to these other data centers will incur egress charges.

Note: Data traffic within your VMware Cloud on AWS organization or between the organization and AWS services in that same region is exempt from egress charges. However, any traffic from the organization to another availability zone or to another AWS region will be subject to egress charges.

Data ingress (that is, data flowing into the VMware Cloud on AWS data center) is free of charge.
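To make the estimate concrete, the following is a minimal sketch of a monthly egress calculation. It assumes you can supply an average outbound bandwidth per session and a per-GB price from your own rate card. The `EgressProfile` type, its field names, and all numbers are hypothetical placeholders, not measured values or published prices.

```python
from dataclasses import dataclass


@dataclass
class EgressProfile:
    """Hypothetical usage profile; every field is an estimate you supply."""
    sessions: int                 # average number of active sessions
    avg_kbps_per_session: float   # average outbound traffic per session, in kbit/s
    hours_per_day: float          # active hours per working day
    days_per_month: int           # working days per month


def monthly_egress_gb(profile: EgressProfile) -> float:
    """Return estimated outbound data per month in GB (1 GB = 10**9 bytes)."""
    active_seconds = profile.hours_per_day * 3600 * profile.days_per_month
    total_bits = (profile.sessions * profile.avg_kbps_per_session
                  * 1000 * active_seconds)
    return total_bits / 8 / 1e9


def monthly_egress_cost(profile: EgressProfile, price_per_gb: float) -> float:
    """Multiply the estimated volume by a per-GB price from your own rate card."""
    return monthly_egress_gb(profile) * price_per_gb


# Illustrative numbers only, not measured values or published prices.
example = EgressProfile(sessions=1000, avg_kbps_per_session=200,
                        hours_per_day=8, days_per_month=22)
print(f"{monthly_egress_gb(example):,.0f} GB per month")
```

With these placeholder inputs, the estimate is 15,840 GB per month. Traffic from remote experience features, external applications, and CPA would need to be added as separate profiles, because the section above notes that their volume varies more than protocol traffic.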

Scaling Deployments

In this section, we will discuss options for scaling out Horizon on VMware Cloud on AWS, using the All-in-SDDC architecture, with a single SDDC and with multiple SDDCs.

One of the main constraints to consider is the network throughput capability of the NSX edge gateway components inside the SDDCs. The network throughput into and out of an SDDC limits the number of Horizon sessions each SDDC is capable of hosting.

When assessing the amount of network traffic that the Horizon sessions will consume, a variety of factors should be considered, including:

- Protocol traffic – Can be affected by the display resolution, number of displays, content type, and usage.

- User data – Where the user data is outside the SDDC or is replicated into or out of the SDDC.

- Application data – When applications used in the Horizon desktops or published applications reside outside the SDDC.

The number of Horizon sessions that can be hosted within a single SDDC depends on how much network traffic the sessions generate that needs to enter or exit the SDDC and therefore traverse the NSX edge gateways.

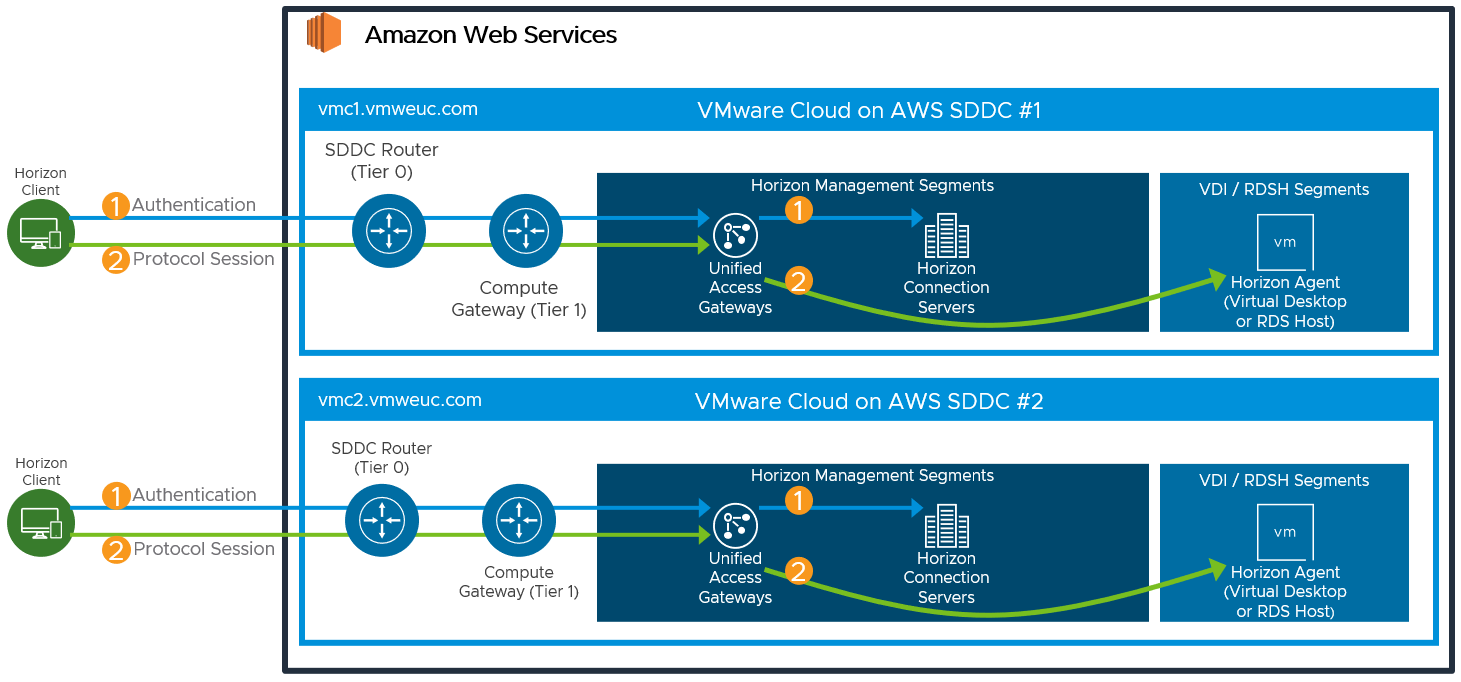
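The relationship between edge throughput and session count can be sketched as a simple division. This is a rough planning aid under stated assumptions, not a sizing tool: the edge throughput figure, the per-session traffic figures, and the headroom percentage are all hypothetical inputs that you would replace with values measured in your own environment.

```python
def max_sessions_per_sddc(edge_throughput_kbps: int,
                          per_session_kbps: int,
                          headroom_percent: int = 20) -> int:
    """Estimate how many sessions fit within the NSX edge throughput.

    per_session_kbps should include only traffic that enters or exits the
    SDDC: protocol traffic, user data, and application data hosted outside.
    headroom_percent is an assumed safety margin kept free on the edge.
    """
    if per_session_kbps <= 0:
        raise ValueError("per_session_kbps must be positive")
    usable_kbps = edge_throughput_kbps * (100 - headroom_percent) // 100
    return usable_kbps // per_session_kbps


# Hypothetical per-session traffic crossing the edge, in kbit/s.
protocol_kbps = 500
user_data_kbps = 200
application_kbps = 300
per_session_kbps = protocol_kbps + user_data_kbps + application_kbps

# 5,000,000 kbit/s is a placeholder, not a published NSX edge limit.
print(max_sessions_per_sddc(5_000_000, per_session_kbps))
```

Keeping user data and applications inside the SDDC reduces the per-session figure and therefore raises the number of sessions a single SDDC can host.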

Single SDDC

The simplest deployment comprises a single SDDC. This also forms the building block for multiple SDDC deployments.

- Resource pools for Horizon Management and Horizon User are created within the Workload resource pool that is created by default.

- Network segments are added for External-DMZ, Internal-DMZ, Horizon-Management, and VDI and RDSH as detailed in Network Configuration.

The Horizon management components, such as the Horizon Connection Servers and Unified Access Gateways, are built out and attached to the relevant network segments inside the SDDC.

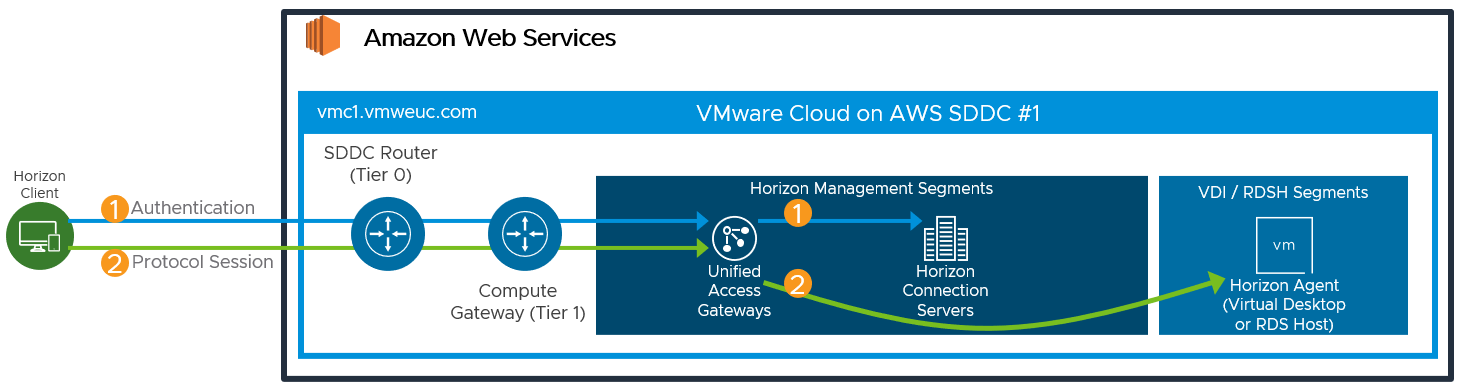

Figure 14: Logical Architecture for a Single SDDC with Networking

An FQDN (for example, vmc1.company.com) is bound to an external public IP address, which is then NATed to the HA virtual IP address of the Unified Access Gateways. Each individual Unified Access Gateway also needs a public IP address and NAT rules to forward protocol traffic to it.

This FQDN will then be used to provide user access by either:

- Using the FQDN with the Horizon Client.

- Using Universal Broker and configuring the Pod External FQDN to use the pod FQDN.

- Integrating with Workspace ONE Access. See Horizon and Workspace ONE Access Integration in the Platform Integration chapter.

Figure 15: Horizon Connection Flow with Single SDDC

Networking to an on-premises data center, if required, can be achieved by using either Direct Connect or a VPN, as covered in the External Networking section earlier in this guide.

Figure 16: SDDC with Networking to On-Premises Data Center

To build out a full Horizon pod environment, the following components and servers were deployed.

Table 11: Connection Server Strategy

| Decision | Two Horizon Connection Servers were deployed. These ran on dedicated Windows Server 2019 VMs located in the Horizon-Management network segment. The Universal Broker plugin was installed on each Connection Server. |

| Justification | One Connection Server supports a maximum of 4,000 sessions. A second server provides redundancy and availability (n+1). The Universal Broker plugin is required if using Multi-Cloud Assignments with Universal Broker. |

Table 12: Unified Access Gateway Strategy

| Decision | Two standard-size Unified Access Gateway appliances were deployed as part of the Horizon solution. These are two-NIC appliances with one NIC in the External-DMZ network segment and one in the Internal-DMZ network segment. |

| Justification | UAG provides secure external access to internally hosted Horizon desktops and applications. One standard UAG appliance is recommended for up to 2,000 concurrent Horizon connections. A second UAG provides redundancy and availability (n+1). |
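The n+1 reasoning in Tables 11 and 12 can be sketched as a small calculation. The per-appliance session figures are taken from the justifications above. The function name and the example session counts are illustrative assumptions, and the sketch does not account for any other product limits.

```python
import math

# Per-appliance figures quoted in Table 11 and Table 12.
CONNECTION_SERVER_MAX_SESSIONS = 4000
UAG_STANDARD_MAX_SESSIONS = 2000


def appliances_needed(sessions: int, per_appliance: int, spare: int = 1) -> int:
    """Return the appliance count for the session load plus spare capacity (n+1)."""
    if sessions < 0 or per_appliance <= 0:
        raise ValueError("sessions must be >= 0 and per_appliance must be > 0")
    return math.ceil(sessions / per_appliance) + spare


# Hypothetical target of 2,000 concurrent sessions.
target_sessions = 2000
connection_servers = appliances_needed(target_sessions, CONNECTION_SERVER_MAX_SESSIONS)
gateways = appliances_needed(target_sessions, UAG_STANDARD_MAX_SESSIONS)
print(connection_servers, gateways)
```

For 2,000 sessions the sketch returns two Connection Servers and two Unified Access Gateways, which matches the decisions recorded in the tables.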

Table 13: Workspace ONE Access Connector Strategy

| Decision | One Workspace ONE Access connector was deployed. |

| Justification | Connects to the Workspace ONE instance to integrate Horizon entitlements and allow for seamless brokering into desktops and applications. While more than one Workspace ONE Access connector could be deployed, other Workspace ONE Access connectors were already present in other locations which would provide redundancy in the case of an outage of this connector. |

Table 14: App Volumes Manager Strategy

| Decision | Two App Volumes Managers were deployed. |

| Justification | App Volumes is used to deploy applications locally. The two App Volumes Managers provide scale and redundancy. The two App Volumes Managers are load balanced with AWS Elastic Load Balancing, although any third-party load balancer could have been used. |

Table 15: Dynamic Environment Manager Strategy

| Decision | VMware Dynamic Environment Manager™ (DEM) was deployed on the local file server. |

| Justification | This is used to provide customization / configuration of the Horizon desktops. This location contains the Configuration and local Profile shares for DEM. |

Table 16: SQL Server Strategy

| Decision | A SQL server was deployed. |

| Justification | This SQL server was used for the Horizon Events Database and App Volumes. |

Multiple SDDC

To build environments capable of supporting larger numbers of users than a single SDDC can support, multiple SDDC environments can be deployed. As the All-in-SDDC architecture for Horizon on VMware Cloud on AWS places all the management components inside the SDDC, there are some considerations when scaling above a single SDDC, related to the traffic throughput of the NSX edge gateways.

The current recommendation is that a Horizon pod, based in VMware Cloud on AWS, should only contain a single SDDC. If multiple SDDCs were added to the same Horizon pod, user traffic might get routed through an initial SDDC to get to a destination SDDC, causing unnecessary traffic through the NSX edge gateways in the initial SDDC. See Protocol Traffic Hairpinning to understand this consideration.

Figure 17: Logical Architecture for Multiple SDDC

Each SDDC is built out as a separate Horizon pod following the same design outlined in the Single SDDC design. Each SDDC has its own distinct set of Horizon management components, including Connection Servers, Unified Access Gateways, App Volumes Managers, and Horizon Edge Gateway.

Figure 18: Multiple SDDC Deployment with Networking

Each Horizon pod, and therefore the SDDC that contains it, will have a dedicated FQDN for user access. For example, vmc1.company.com and vmc2.company.com. Each SDDC would have a dedicated public IP address that would correlate to its FQDN.

This avoids potential protocol traffic hairpinning through the wrong SDDC.

Figure 19: Horizon Connection Flow for Two SDDCs

Multiple Horizon Pods

When deploying environments with multiple Horizon pods, there are different options available for administering and entitling users to resources across the pods. This is applicable for Horizon environments on VMware Cloud on AWS, on other cloud platforms, or on-premises.

- The Horizon pods can be managed separately, users entitled separately to each pod, and users directed to use the unique FQDN for the correct pod.

- Alternatively, you can manage and entitle the Horizon environments by linking them using either the Horizon Universal Broker or Cloud Pod Architecture (CPA).

Universal Broker offers several advantages over Cloud Pod Architecture:

- Multi-platform – Works with both Horizon and Horizon Cloud on Microsoft Azure.

- Smart brokering – Can broker resources from assignments to end users along the shortest network route, based on awareness of the geographical sites and pods topology.

- Simplified networking – No networking is required between the Horizon pods.

- Targeted – Avoids potential protocol traffic hairpinning between Horizon pods.

To understand the current limitations when using the Universal Broker, see Universal Broker - Feature Considerations and Known Limitations.

Protocol Traffic Hairpinning

One consideration to be aware of is potential hairpinning of the Horizon protocol traffic through a Horizon pod or SDDC other than the one the user is consuming a Horizon resource from. This can occur if the user's session is initially sent to the wrong SDDC for authentication.

With each SDDC, all traffic into and out of the SDDC goes via the NSX edge gateways and there is a limit to the traffic throughput. In the All-in-SDDC architecture, the management components, including the Unified Access Gateways, are located inside the SDDC, and authentication traffic has to enter an SDDC and pass through the NSX edge gateway.

If the authentication traffic is not precisely directed, for example by using multi-cloud assignments with Universal Broker or by using a unique namespace for each Horizon pod, the user could be directed to any Horizon pod in its respective SDDC. If the user's Horizon resource is not in the SDDC where they are initially directed for authentication, their Horizon protocol traffic goes into the initial SDDC to a Unified Access Gateway in that SDDC, then back out via the NSX edge gateways, and is then directed to where their desktop or published application is being delivered from.

This protocol hairpinning reduces the achievable scale, because the initial SDDC's NSX edge gateways carry traffic for sessions that the SDDC does not host. For this reason, caution should be exercised if using Horizon Cloud Pod Architecture.
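The effect on edge load can be illustrated with a simplified model. The sketch below assumes that a locally served session crosses its SDDC's edge once, and that a hairpinned session crosses the entry SDDC's edge twice (in to the Unified Access Gateway and back out) and the destination SDDC's edge once. These traversal counts, the SDDC names, and the per-session figure are modeling assumptions for illustration only.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def edge_load_kbps(sessions: Iterable[Tuple[str, str]],
                   per_session_kbps: int) -> Dict[str, int]:
    """Sum the protocol traffic crossing each SDDC's NSX edge.

    sessions is a sequence of (entry_sddc, desktop_sddc) pairs, where
    entry_sddc is where the user first connected and desktop_sddc is
    where the desktop or published application runs.
    """
    load: Dict[str, int] = defaultdict(int)
    for entry_sddc, desktop_sddc in sessions:
        # The SDDC hosting the desktop always carries the session once.
        load[desktop_sddc] += per_session_kbps
        if entry_sddc != desktop_sddc:
            # Hairpinned: traffic enters the entry SDDC and leaves it again.
            load[entry_sddc] += 2 * per_session_kbps
    return dict(load)


# Three sessions served locally in sddc1, one desktop hosted in sddc2.
direct = [("sddc1", "sddc1")] * 3 + [("sddc2", "sddc2")]
hairpinned = [("sddc1", "sddc1")] * 3 + [("sddc1", "sddc2")]

print(edge_load_kbps(direct, 1000))
print(edge_load_kbps(hairpinned, 1000))
```

In this model, sending the fourth user to sddc1 first raises the load on the sddc1 edge from 3,000 to 5,000 kbit/s without adding any hosted sessions, which is the scale reduction described above.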

Figure 20: Potential Horizon Protocol Traffic Hairpinning with All-in-SDDC Deployments

Licensing

Enabling Horizon to run on VMware Cloud on AWS requires you to acquire capacity on VMware Cloud on AWS and a Horizon subscription license.

To enable the use of subscription licensing, each Horizon pod must be connected to the Horizon Cloud Service. For more information on how to do this, see the Horizon Cloud Service section of the Horizon 8 Architecture chapter.

For a POC or pilot deployment of Horizon on VMware Cloud on AWS, you may use a temporary evaluation license or your existing perpetual license. However, to enable Horizon for production deployment on VMware Cloud on AWS, you must purchase a Horizon subscription license. For more information on the features and packaging of Horizon subscription licenses, see VMware Horizon Subscription - Feature comparison.

You can use different licenses on different Horizon pods, regardless of whether the pods are connected by CPA. You cannot mix different licenses within a pod because each pod only takes one type of license. For example, you cannot use both a term license and a subscription license for a single pod. You also cannot use both the Horizon Apps Universal Subscription license and the Horizon Universal Subscription license in a single pod.

Preparing Active Directory

Horizon requires Active Directory services. For supported Active Directory Domain Services (AD DS) domain functional levels, see the VMware Knowledge Base (KB) article: Supported Operating Systems and MSFT Active Directory Domain Functional Levels for VMware Horizon 8 2006 (78652).

If you are deploying Horizon in a hybrid cloud environment by linking an on-premises environment with a VMware Cloud on AWS Horizon pod, you should extend the on-premises Microsoft Active Directory (AD) to VMware Cloud on AWS.

Although you could access on-premises Active Directory services and not locate new domain controllers in VMware Cloud on AWS, this could introduce undesirable latency.

A site should be created in Active Directory Sites and Services and associated with the subnets containing the domain controllers in VMware Cloud on AWS. This keeps the Active Directory services traffic local.
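The following sketch illustrates the idea behind subnet-to-site association: an address is matched against the subnets defined for each site, and the most specific matching subnet wins. It is a conceptual illustration only and does not call any Active Directory API. The site names and subnet ranges are hypothetical.

```python
import ipaddress
from typing import Dict, List, Optional

# Hypothetical site definitions; replace with your own sites and subnets.
SITE_SUBNETS: Dict[str, List[str]] = {
    "OnPrem-DC": ["10.0.0.0/16"],
    "VMC-AWS": ["192.168.10.0/24", "192.168.20.0/24"],
}


def site_for_ip(ip: str,
                site_subnets: Dict[str, List[str]] = SITE_SUBNETS) -> Optional[str]:
    """Return the site whose most specific subnet contains the address."""
    address = ipaddress.ip_address(ip)
    best_site: Optional[str] = None
    best_prefix = -1
    for site, subnets in site_subnets.items():
        for cidr in subnets:
            network = ipaddress.ip_network(cidr)
            # Prefer the longest prefix when several subnets match.
            if address in network and network.prefixlen > best_prefix:
                best_site, best_prefix = site, network.prefixlen
    return best_site


print(site_for_ip("192.168.10.25"))
```

An address that matches no subnet returns `None` here, which corresponds to a machine that is not associated with any site and may therefore be served by a domain controller across the link to on-premises.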

Table 17: Active Directory Strategy

| Decision | Active Directory domain controllers were installed into VMware Cloud on AWS. |

| Justification | Locating domain controllers in AWS reduces latency for Active Directory queries, DNS, and KMS. |

Shared Content Library

Content libraries are container objects for VM templates, vApp templates, OVF templates, and other types of files, such as ISO images and text files. vSphere administrators can use the templates in the library to deploy virtual machines and vApps in the vSphere inventory. Sharing golden images across multiple vCenter Server instances, between multiple VMware Cloud on AWS SDDCs, on-premises SDDCs, or both, helps ensure consistency, compliance, efficiency, and automation when deploying workloads at scale.

For more information, see Using Content Libraries in the vSphere Virtual Machine Administration guide in the VMware vSphere documentation.

Using Native AWS Services

Combining Horizon with native AWS services and VMware Cloud on AWS allows organizations to get the best of both worlds – easily consumed cloud services to provision and operate enterprise applications with a platform that requires few changes to the applications themselves and operational processes.

Utilizing native AWS services like those listed in this section has additional benefits for EUC environments. By utilizing native AWS services, resources that would otherwise be reserved for and consumed by servers can now be utilized for desktops, providing a greater desktop density. The following section explores and details the AWS services that are complementary to Horizon on VMware Cloud on AWS.

The following subsections detail how you can integrate Horizon on VMware Cloud on AWS with native AWS services.

AWS Direct Connect

Simplify migration and increase interoperability between your data center and VMware Cloud on AWS with AWS networking service AWS Direct Connect. This service uses a dedicated, secure network connection to an organization’s on-premises data center. AWS Direct Connect can provide a more consistent network experience than VPN connectivity via the public internet.

AWS Direct Connect offers bandwidth flexibility, with 1 Gbps and 10 Gbps options available to suit the requirements of organizations. Additionally, AWS Direct Connect is compatible with all AWS services, including VMware Cloud on AWS.

For Horizon deployments, AWS Direct Connect enables users to connect to resources that are yet to migrate to the cloud and are hosted in an on-premises data center, and/or to their virtual desktop from an organization’s premises with predictable, consistent performance.

Elastic Load Balancing

Elastic Load Balancing automatically distributes traffic across a number of resources for the purposes of performance, scale, and availability. AWS offers an Application Load Balancer (ALB) and a Network Load Balancer (NLB). ALB is suited to web traffic such as HTTP and HTTPS. NLB is aimed at TCP, UDP, and TLS traffic where performance is a priority. These load balancers can help reduce the complexity of an organization’s environment.

In a Horizon environment, ALB and NLB can replace existing third-party load balancers to scale and protect the Unified Access Gateways and Connection Servers. This can reduce the cost and complexity of a deployment by eliminating additional third-party components and utilizing native AWS services.

Amazon FSx

Leverage Amazon FSx for scalable, elastic VDI workload storage, either on-premises or on AWS. Amazon FSx provides the native compatibility of third-party file systems, which reduces the administrative overhead of provisioning and managing file servers and storage. It also automates additional administrative tasks such as hardware provisioning, software configuration, patching, and backups.

Amazon FSx for Windows File Server is built on Microsoft Windows Server and includes full support for the Server Message Block (SMB) protocol, Windows New Technology File System (NTFS), Active Directory integration, and Distributed File System (DFS). It is built on solid state drive (SSD) storage, is fully managed, and comes complete with automated backups.

Amazon FSx for Windows File Server provides a fully managed, native AWS shared file system for in-guest file storage to the Horizon Desktops that reside in your VMware Cloud on AWS SDDC.

File systems ranging from 300 GiB to 65,536 GiB in storage capacity can be created, including throughput capacity ranging from 8 MB/s to 2048 MB/s. There is no need to worry about capturing and storing backups, or how to restore from a backup in a disaster recovery event.

To understand how Amazon FSx for Windows File Server can be used within a VMware Horizon environment, consider the following example. An organization wants to consolidate data centers and migrate a large Horizon VDI environment to the cloud, and a large set of user data is stored in native Windows file shares. To accelerate the migration, VMware Cloud on AWS is employed to remove the need for re-tooling or re-platforming the VDI environment, and Amazon FSx for Windows File Server is used to provide the SMB shares required for user home directories and group shared folders.

Amazon Route 53

Seamlessly integrate the Amazon Route 53 Domain Name System (DNS) service with your virtual desktops and applications to simplify DNS management for VDI. Amazon Route 53 is a scalable, highly available DNS service, designed to give organizations a simple, reliable, and cost-effective way to connect users to resources. With Amazon Route 53 Traffic Flow, global traffic management is simplified, and various routing options are available, such as Latency Based Routing, Geo DNS, Geo-proximity, and Weighted Round Robin.

Organizations looking to deploy Horizon on VMware Cloud on AWS in multiple AWS Regions, or simply manage a DNS zone, can utilize Amazon Route 53. This highly available and scalable cloud DNS service can manage DNS failover between the locations and gives organizations a reliable and cost-effective way to route end users to Horizon deployments, as well as to Internet applications either on or outside of AWS.
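The failover behavior can be pictured as returning the first healthy endpoint from a priority-ordered list. The sketch below is a conceptual illustration only. It does not use the Amazon Route 53 API, and the function name, the health map, and the idea of a simple ordered list are assumptions made for the example. The FQDNs follow the vmc1.company.com and vmc2.company.com naming used earlier in this chapter.

```python
from typing import Dict, List, Optional


def resolve_with_failover(endpoints_by_priority: List[str],
                          healthy: Dict[str, bool]) -> Optional[str]:
    """Return the highest-priority endpoint that is currently healthy."""
    for fqdn in endpoints_by_priority:
        # Endpoints with no recorded health status are treated as unhealthy.
        if healthy.get(fqdn, False):
            return fqdn
    return None


endpoints = ["vmc1.company.com", "vmc2.company.com"]

# Normal operation: the primary location answers.
print(resolve_with_failover(endpoints, {"vmc1.company.com": True,
                                        "vmc2.company.com": True}))

# Primary location unavailable: users are sent to the secondary location.
print(resolve_with_failover(endpoints, {"vmc1.company.com": False,
                                        "vmc2.company.com": True}))
```

When combining DNS-based routing with multiple SDDCs, keep the protocol traffic hairpinning consideration in mind, so that users are only sent to a location that can serve their desktop or application.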

AWS Directory Service for Microsoft Active Directory

Manage Horizon workloads using AWS Directory Service for Microsoft Active Directory (also known as AWS Managed Microsoft AD). AWS Managed Microsoft AD provides a managed Active Directory in the AWS Cloud. Built on an actual Active Directory, AWS Managed Microsoft AD does not require the synchronization or replication of data from an existing Active Directory. Standard AWS Managed Microsoft AD allows for the use of the usual Active Directory administration tools, such as Active Directory Users and Computers and Group Policy Management Console.

AWS Managed Microsoft AD enables the simple migration of Horizon workloads without the need to build out a full Active Directory infrastructure, while still letting administrators use the management tools they are familiar with. Trust relationships are available with existing Active Directories to extend and leverage the existing user credentials to access Horizon resources. Each directory is built across multiple Availability Zones, failures are automatically detected, and failed domain controllers are replaced. Data replication, backup, patching, and updates are all managed.

Amazon Relational Database Service

Accelerate the storage and retrieval of database data in your virtual desktop and virtual application environment using the Amazon Relational Database Service (Amazon RDS). Amazon RDS provides a managed database service in which management of the underlying EC2 instance and the operating system is abstracted from the organization. Administrative tasks such as provisioning, backup, and updates are all managed by AWS.

Several database engines are available for Amazon RDS, including Amazon Aurora, Microsoft SQL Server, PostgreSQL, MySQL, MariaDB, and Oracle.

Amazon RDS is highly scalable, with scaling operations that take just a few clicks in the AWS console or a simple API call. With On Demand and Reserved Instance pricing available, Amazon RDS offers organizations an inexpensive way to provision their database requirements.

In a Horizon environment, Amazon RDS with the Microsoft SQL Server database engine can be utilized to host the Horizon Events Database. This removes the need for VDI administrators to build and manage complex and costly SQL Server environments.

Resource Sizing

Memory Reservations

Because physical memory cannot be shared between virtual machines, and because swapping or ballooning should be avoided at all costs, be sure to reserve all memory for all Horizon virtual machines, including management components, virtual desktops, and RDS hosts.

CPU Reservations

CPU reservations are shared when not used, and a reservation specifies the guaranteed minimum allocation for a virtual machine. For the management components, the reservations should equal the number of vCPUs times the CPU frequency. Any amount of CPU reservations not actively used by the management components will still be available for virtual desktops and RDS hosts when they are deployed in the same SDDC.
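The reservation rules for management components can be sketched as follows: memory is reserved in full, and the CPU reservation is the number of vCPUs multiplied by the core frequency. The VM names, vCPU counts, memory sizes, and the 2,500 MHz core frequency are hypothetical values chosen for illustration, not sizing recommendations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ManagementVM:
    name: str
    vcpus: int
    memory_gb: int


def cpu_reservation_mhz(vm: ManagementVM, core_mhz: int) -> int:
    """Full CPU reservation: number of vCPUs times the CPU frequency."""
    return vm.vcpus * core_mhz


def memory_reservation_gb(vm: ManagementVM) -> int:
    """Full memory reservation: all configured memory is reserved."""
    return vm.memory_gb


# Hypothetical host core frequency and management VM sizes.
HOST_CORE_MHZ = 2500
management_vms: List[ManagementVM] = [
    ManagementVM("connection-server-1", vcpus=4, memory_gb=12),
    ManagementVM("connection-server-2", vcpus=4, memory_gb=12),
    ManagementVM("uag-1", vcpus=2, memory_gb=4),
    ManagementVM("uag-2", vcpus=2, memory_gb=4),
]

# The resource pool reservation is the sum of the VM-level reservations.
pool_cpu_mhz = sum(cpu_reservation_mhz(vm, HOST_CORE_MHZ) for vm in management_vms)
pool_memory_gb = sum(memory_reservation_gb(vm) for vm in management_vms)
print(pool_cpu_mhz, pool_memory_gb)
```

For these placeholder sizes the management resource pool would reserve 30,000 MHz of CPU and 32 GB of memory.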

Virtual Machine–Level Reservations

As well as setting a reservation on the resource pool, be sure to set a reservation at the virtual machine level. This ensures that any VMs that might later get added to the resource pool will not consume resources that are reserved and required for HA failover. These VM-level reservations do not remove the requirement for reservations on the resource pool. Because VM-level reservations are taken into account only when a VM is powered on, the reservation could be taken by other VMs when one VM is powered off temporarily.

Leveraging CPU Shares for Different Workloads

Because RDS hosts can facilitate more users per vCPU than virtual desktops can, a higher share should be given to them. When desktop VMs and RDS host VMs are run on the same cluster, the share allocation should be adjusted to ensure relative prioritization.

As an example, if an RDS host with 8 vCPUs facilitates 28 users and a virtual desktop with 2 vCPUs facilitates a single user, the RDS host is facilitating 7 times the number of users per vCPU. In that scenario, the desktop VMs should have a default share of 1000, and the RDS host VMs should have a vCPU share of 7000 when deployed on the same cluster. This number should also be adjusted to the required amount of resources, which could be different for a VDI virtual desktop session versus a shared RDSH-published desktop session.
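The arithmetic in this example can be checked with a short calculation. The sketch reads the 1000 and 7000 figures as per-vCPU share values, which is an interpretation of the text above, and the function name is illustrative.

```python
from fractions import Fraction


def rdsh_shares(rdsh_users: int, rdsh_vcpus: int,
                vdi_users: int, vdi_vcpus: int,
                vdi_shares: int = 1000) -> int:
    """Scale the desktop share value by the users-per-vCPU ratio."""
    rdsh_density = Fraction(rdsh_users, rdsh_vcpus)   # users per vCPU on the RDS host
    vdi_density = Fraction(vdi_users, vdi_vcpus)      # users per vCPU on the desktop
    ratio = rdsh_density / vdi_density
    return int(ratio * vdi_shares)


# 28 users on 8 vCPUs is 3.5 users per vCPU; 1 user on 2 vCPUs is 0.5.
# The ratio is therefore 7, giving 7 x 1000 shares for the RDS hosts.
print(rdsh_shares(rdsh_users=28, rdsh_vcpus=8, vdi_users=1, vdi_vcpus=2))
```

Exact fractions are used so that the ratio is not affected by floating-point rounding. The result is a starting point that should still be adjusted for the resources each type of session actually needs.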

Table 18: Reservations and Shares Overview

| Workload | Resource Pool Reservation: Memory | Resource Pool Reservation: CPU | VM Reservation: Memory | VM Reservation: CPU | Shares |

| Management | Full | Full (vCPU*Freq) | Full | Full (vCPU*Freq) | No |

| VDI | Full | No | Full | No | Default |

| RDSH | Full | No | Full | No | By ratio |

Deploying Desktops

With Horizon on VMware Cloud on AWS, both Instant Clones and full clones can be utilized. Instant Clones coupled with App Volumes and Dynamic Environment Manager help accelerate the delivery of user-customized and fully personalized desktops.

Instant Clones

Dramatically reduce infrastructure requirements while enhancing security by delivering a brand-new personalized desktop and application services to end users every time they log in:

- Reap the economic benefits of stateless, nonpersistent virtual desktops served up to date upon each login.

- Deliver a pristine, high-performance personalized desktop every time a user logs in.

- Improve security by destroying desktops every time a user logs out.

For more information on Instant Clones, see Instant Clone Smart Provisioning.

App Volumes

App Volumes provides real-time application delivery and management for on-premises environments and for VMware Cloud on AWS:

- Quickly provision applications at scale.

- Dynamically attach applications to users, groups, or devices, even when users are already logged in to their desktop.

- Provision, deliver, update, and retire applications in real time.

- Provide a user-writable volume, allowing users to install applications that follow them across desktops.

- Provide end users with quick access to a Windows workspace and applications, with a personalized and consistent experience across devices and locations.

- Simplify end-user profile management by providing organizations with a single and scalable solution that leverages the existing infrastructure.

- Speed up the login process by applying configuration and environment settings in an asynchronous process instead of all at login.

- Provide a dynamic environment configuration, such as drive or printer mappings, when a user launches an application.

For design guidance, see App Volumes Architecture.

For more information on how to transfer packages from an on-premises instance of App Volumes to an instance based on VMware Cloud on AWS, see Transfer Volumes for VMware Cloud on AWS.

Using NFS Datastores on VMware Cloud on AWS

When deployed on VMware Cloud on AWS, App Volumes can use AWS managed external NFS datastores to assist in the replication of packages from one App Volumes instance to another. AWS managed external NFS datastores are provided by Amazon FSx for NetApp ONTAP.

These NFS datastores can be attached to VMware Cloud on AWS vSphere clusters and used as shared datastores between different vSphere clusters to facilitate App Volumes package replication between App Volumes instances.

For more information on using Amazon FSx for NetApp ONTAP see:

- VMware Cloud on AWS integration with Amazon FSx for NetApp ONTAP

- Configure Amazon FSx for NetApp ONTAP as External Storage

Dynamic Environment Manager

Use VMware Dynamic Environment Manager for application personalization and dynamic policy configuration across any virtual, physical, and cloud-based environment. Install and configure Dynamic Environment Manager on VMware Cloud on AWS just like you would install it on-premises.

For more information, see Dynamic Environment Manager Architecture.

Deploying External Storage for User Data

User data is an important consideration when thinking about deploying Horizon on VMware Cloud on AWS. For storing user profiles and user data, you can either deploy a Windows file share on VMware Cloud on AWS (and use DFS-R to replicate data across multiple sites) or use external storage, such as Dell EMC Unity Cloud Edition.

Dell EMC Unity Cloud Edition provides a ready-made solution for storing file data such as user home directories and can be easily deployed alongside Horizon on VMware Cloud on AWS. Dell EMC Unity Cloud Edition also supports Cloud Sync for replicating data between Dell EMC Unity systems on-premises and VMware Cloud on AWS.

For deployment details, see Dell EMC Unity Cloud Edition with VMware Cloud on AWS Whitepaper and video.

Summary and Additional Resources

Now that you have come to the end of this Horizon on VMware Cloud on AWS design chapter, you can return to the landing page and use the tabs, search, or scroll to select your next chapter in one of the following sections:

- Overview chapters provide understanding of business drivers, use cases, and service definitions.

- Architecture chapters give design guidance on the products you are interested in including in your platform, including Workspace ONE UEM, Workspace ONE Access, Workspace ONE Assist, Workspace ONE Intelligence, Horizon Cloud Service, Horizon, App Volumes, Dynamic Environment Manager, and Unified Access Gateway.

- Integration chapters cover the integration of products, components, and services you need to create the platform capable of delivering the services that you want to deliver to your users.

- Configuration chapters provide reference for specific tasks as you build your platform, such as installation, deployment, and configuration processes for Workspace ONE, Horizon Cloud Service, Horizon, App Volumes, Dynamic Environment Manager, and more.

Additional Resources

For more information about VMware Horizon on VMware Cloud on AWS, you can explore the following resources:

- VMware Horizon on VMware Cloud on AWS product page

- VMware Horizon on VMware Cloud on AWS documentation

Changelog

The following updates were made to this guide:

| Date | Description of Changes |

| 2023-07-19 | |

| 2022-09-08 | |

Author and Contributors

This chapter was written by:

- Graeme Gordon, Senior Staff End-User-Computing (EUC) Architect in End-User-Computing Technical Marketing, VMware.

- Hilko Lantinga, Staff Engineer 2 in EUC R&D, VMware.

Feedback

Your feedback is valuable.

To comment on this paper, contact VMware End-User-Computing Technical Marketing at euc_tech_content_feedback@vmware.com.